AI-Driven MVPs for Product-Market Fit Testing

AI-driven MVPs (Minimum Viable Products) are reshaping how startups test ideas. By integrating AI into the early stages of product development, you can validate not just market demand but also whether AI features like automation or prediction genuinely improve outcomes. The key is to start small, focus on one core AI function, and use data to guide decisions.

Here’s the quick takeaway:

- Why AI MVPs? They help you test smarter, faster, and with a leaner budget. For example, MobiDev used AI-assisted coding to build a CRM MVP for Treegress in 18 hours instead of the usual 130, cutting the budget by 76%.

- How to Start: Define a clear hypothesis like, “If we use AI for [specific issue], then [measurable result].” Use simple AI elements initially, such as off-the-shelf APIs or basic models.

- Costs: A basic AI MVP costs £25,000–£41,000, while enterprise versions can go over £205,000.

- Common Pitfalls: Around 75% of AI MVPs fail to deliver ROI, often because of unclear goals or unnecessary complexity. Stay focused on measurable outcomes.

- Tools to Use: ChatGPT for drafting interview scripts, Figma for design, and synthetic data tools for testing can speed up and refine your process.

The secret sauce? Don’t overcomplicate. Use AI tools to save time, but keep your goals sharp and your MVP lean. Start with a single, testable AI feature, gather feedback, and refine based on data. This approach ensures you’re building something people actually need - without wasting time or money.

Defining Your Hypothesis and Target Audience

A solid hypothesis and a clearly defined target audience are the cornerstones of any successful AI-driven MVP. Before diving into code, you need to nail down the problem you're solving and who you're solving it for. As Adrenalin Insights aptly states, "the risk of building the wrong solution remains high... Faster does not automatically mean smarter" [8].

Your hypothesis needs to follow a straightforward structure: "We believe [target users] have a problem with [situation] because [reason]. If we provide [solution], then [outcome] will occur, measured by [metrics]" [8]. Keep your focus sharp by concentrating on one core AI function. The MoSCoW method (Must-have, Should-have, Could-have, Won't-have) can help you weed out unnecessary features [5][10]. When setting metrics, aim for two types: a primary business KPI (like conversion rate or retention) and AI-specific guardrails (such as accuracy, latency, or cost-per-inference) [2].
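
To make the two-metric structure concrete, here is a minimal Python sketch that encodes the hypothesis template with one primary business KPI and a set of AI guardrails. The class names, fields, thresholds and example values are all hypothetical. The point is that a hypothesis only counts as validated when the KPI target is met and every guardrail holds.

```python
from dataclasses import dataclass, field


@dataclass
class Guardrail:
    """An AI-specific limit the MVP must respect (accuracy, latency, cost...)."""
    name: str
    limit: float
    higher_is_better: bool  # True for accuracy; False for latency or cost

    def passes(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.limit
        return observed <= self.limit


@dataclass
class Hypothesis:
    target_users: str
    problem: str
    reason: str
    solution: str
    primary_kpi: str
    kpi_target: float  # minimum acceptable value of the primary business KPI
    guardrails: list = field(default_factory=list)

    def statement(self) -> str:
        metrics = ", ".join([self.primary_kpi] + [g.name for g in self.guardrails])
        return (
            f"We believe {self.target_users} have a problem with {self.problem} "
            f"because {self.reason}. If we provide {self.solution}, then "
            f"{self.primary_kpi} will reach at least {self.kpi_target}, "
            f"measured by {metrics}."
        )

    def validated(self, kpi_value: float, observed: dict) -> bool:
        """True only if the KPI hits its target AND every guardrail holds."""
        if kpi_value < self.kpi_target:
            return False
        return all(g.passes(observed[g.name]) for g in self.guardrails)


# Hypothetical example values, for illustration only.
h = Hypothesis(
    target_users="small UK e-commerce founders",
    problem="slow customer support replies",
    reason="queries are managed in email and spreadsheets",
    solution="AI-drafted support replies",
    primary_kpi="30-day retention",
    kpi_target=0.40,
    guardrails=[
        Guardrail("accuracy", 0.90, higher_is_better=True),
        Guardrail("p95_latency_s", 2.0, higher_is_better=False),
        Guardrail("cost_per_inference_gbp", 0.01, higher_is_better=False),
    ],
)
print(h.statement())
print(h.validated(0.43, {"accuracy": 0.92, "p95_latency_s": 1.4,
                         "cost_per_inference_gbp": 0.004}))  # True
```

Keeping guardrails separate from the KPI stops a retention win from hiding an AI feature that is too slow or too expensive to run.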

Creating a Clear Product Hypothesis

A strong hypothesis zeroes in on a specific business or user problem and shows how AI can solve it better than existing methods [5]. But here's the kicker: AI isn't always the right tool. Ask yourself - does the problem involve unstructured data, repetitive decisions, or predictions? If not, AI might not be the best fit [1].

Frame your hypothesis as an "If-Then" statement: "If we apply [AI Capability] to [Specific Data/Process], then [Business KPI] will improve by [X%]" [5]. This forces clarity about what you're testing. For instance: "If we use sentiment analysis on customer support tickets, then ticket resolution time will improve by 30%."
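
Checking the "improve by [X%]" clause is simple arithmetic, but it is easy to get the direction wrong for metrics where lower is better, such as resolution time. A small sketch, assuming you already have baseline and post-launch averages (the figures below are invented):

```python
def relative_improvement(baseline: float, treatment: float, lower_is_better: bool) -> float:
    """Percentage improvement of the treatment over the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    # For time or cost, a drop is an improvement; for conversion, a rise is.
    change = (baseline - treatment) if lower_is_better else (treatment - baseline)
    return 100.0 * change / baseline


def hypothesis_met(baseline: float, treatment: float, target_pct: float,
                   lower_is_better: bool = False) -> bool:
    return relative_improvement(baseline, treatment, lower_is_better) >= target_pct


# Hypothetical figures: mean resolution time fell from 12 to 8 hours.
print(round(relative_improvement(12.0, 8.0, lower_is_better=True), 1))  # 33.3
print(hypothesis_met(12.0, 8.0, target_pct=30.0, lower_is_better=True))  # True
```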

AI tools can also come in handy during the validation stage. Use ChatGPT to draft interview scripts or test scenarios [11]. AI can also help you spot gaps in competitors' offerings and identify where personalised or predictive features could make a difference [2]. Tools like Product Lab AI, Idea Validator, and FeedbackbyAI are excellent for brainstorming and testing ideas [7].

It’s worth noting that 75% of AI MVPs fail to deliver ROI, often due to unclear goals, poor integration, or unreliable data pipelines [5]. And for AI startups, the failure rate is a staggering 90% [5]. A clear, testable hypothesis is your best shot at avoiding these pitfalls.

Once your hypothesis is locked in, the next step is understanding your users.

Building User Personas with AI

After defining your hypothesis, getting a deep understanding of your target audience is essential. AI can speed up this process dramatically, creating detailed user personas in minutes instead of weeks by analysing market data, demographics, behaviours, and pain points [13]. Feed tools like ChatGPT or Personica with specific market data, and they’ll generate profiles that include motivations, frustrations, and behavioural patterns [12].

Start with the "Four Graphics": demographics, psychographics, firmographics, and technographics [14]. These categories form the foundation for effective AI prompts. For example, if you’re targeting small e-commerce businesses, your prompt might look like this: "Generate a persona for a 35-year-old e-commerce founder in the UK, running a business with £500,000 in annual revenue, struggling with customer support response times, and currently using a mix of email and spreadsheets to manage queries."
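
If you run persona prompts repeatedly, it can help to assemble them from the four categories rather than typing them freehand. This sketch only builds the prompt text; it does not call ChatGPT or any other tool, and the function name and attribute keys are illustrative assumptions.

```python
def build_persona_prompt(demographics: dict, psychographics: dict,
                         firmographics: dict, technographics: dict) -> str:
    """Assemble a persona prompt from the 'Four Graphics' categories."""
    sections = {
        "Demographics": demographics,
        "Psychographics": psychographics,
        "Firmographics": firmographics,
        "Technographics": technographics,
    }
    lines = ["Generate a user persona including motivations, frustrations "
             "and behavioural patterns."]
    for title, attributes in sections.items():
        if attributes:  # skip empty categories rather than padding the prompt
            details = "; ".join(f"{key}: {value}" for key, value in attributes.items())
            lines.append(f"{title}: {details}")
    lines.append("Focus on documented pain points and skip lifestyle details.")
    return "\n".join(lines)


prompt = build_persona_prompt(
    demographics={"age": 35, "location": "UK", "role": "e-commerce founder"},
    psychographics={"pain point": "slow customer support response times"},
    firmographics={"annual revenue": "£500,000"},
    technographics={"current tools": "email and spreadsheets"},
)
print(prompt)
```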

Skip unnecessary lifestyle details and focus on documented pain points [14]. Natural Language Processing (NLP) tools can also scrape platforms like Reddit or G2 to uncover trends in user complaints [12][15]. For example, if you notice users cobbling together Excel and email to manage a process, that’s a clear sign of a market gap your MVP could address [15].

AI clustering algorithms, such as those in RapidMiner, can segment large datasets to uncover high-value niches that traditional research might overlook [12]. Well-crafted personas can directly guide which AI features will make the most impact.
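
Tools like RapidMiner wrap clustering behind their own interfaces; the sketch below is not their API but a plain k-means implementation in standard-library Python, to show what segmentation does under the hood. The two features (sessions per week, features used) and the data points are hypothetical, and in practice you would normalise features before clustering.

```python
import math
import random


def kmeans(points, k, iterations=50, seed=0):
    """Plain k-means: returns a cluster label per point and the final centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise from k distinct users
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each user joins the nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return labels, centroids


# Hypothetical users: (sessions per week, features used)
users = [(1, 1), (1.5, 2), (2, 1), (8, 8), (9, 8.5), (8.5, 9)]
labels, centroids = kmeans(users, k=2)
print(labels, centroids)
```

Each resulting cluster is a candidate segment; a small, highly engaged cluster is the kind of niche worth checking against your personas.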

| Aspect | Traditional User Research | AI-Powered User Research |
|---|---|---|
| Method | Manual surveys and interviews | AI-generated personas and synthetic data [13] |
| Timeline | 2–4 weeks for ~100 responses | Hours for thousands of simulated data points [13] |
| Depth | Limited by human capacity | Deeper insights via large dataset analysis [13] |
| Cost | High (manual labour/incentives) | Low (tool subscriptions) [13] |

One important reminder: AI-generated personas must be validated through real-world interactions [12]. Synthetic personas are great for discovery, but relying solely on them can lead to flawed assumptions. In fact, 42% of failed AI startups cite a lack of market demand as their main downfall [16]. Use AI to speed up research, but always test your ideas with actual users before committing to development.

When real user data is scarce, tools like Gretel or Mostly AI can generate realistic datasets to simulate behaviours like browsing, purchasing, or abandoning carts [13]. This is especially useful for testing your hypothesis before building the full product.
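
Gretel and Mostly AI learn from real data to produce their synthetic datasets. As a much simpler stand-in, the following rule-based simulation generates browsing, purchasing and cart-abandonment sessions from assumed funnel probabilities. The probabilities and field names are invented for illustration; a seeded generator keeps test runs reproducible.

```python
import random
from collections import Counter

# Hypothetical funnel probabilities; tune them to whatever real data you have.
P_ADD_TO_CART = 0.30
P_PURCHASE_GIVEN_CART = 0.40


def synthetic_sessions(n: int, seed: int = 42) -> list:
    """Simulate n shopping sessions with a simple two-step funnel."""
    rng = random.Random(seed)
    sessions = []
    for i in range(n):
        pages = rng.randint(1, 12)  # pages browsed in the session (inclusive range)
        added = rng.random() < P_ADD_TO_CART
        purchased = added and rng.random() < P_PURCHASE_GIVEN_CART
        if purchased:
            outcome = "purchase"
        elif added:
            outcome = "abandon_cart"
        else:
            outcome = "browse_only"
        sessions.append({"session_id": i, "pages_viewed": pages, "outcome": outcome})
    return sessions


sessions = synthetic_sessions(1000)
print(Counter(s["outcome"] for s in sessions))
```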

Lastly, don’t try to fix every problem at once. Use AI to group similar complaints and focus your MVP on the most common and pressing issues [15]. This keeps your hypothesis focused and your development process lean.
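
Grouping and ranking complaints can start very simply. In this sketch, keyword rules stand in for the AI grouping described above (an NLP or clustering tool would replace `tag_complaint`); the theme names, keywords and complaints are hypothetical.

```python
from collections import Counter

# Hypothetical themes and keywords; an NLP model could replace these rules.
THEMES = {
    "slow_response": ["slow", "wait", "delay"],
    "pricing": ["price", "expensive", "cost"],
    "integration": ["spreadsheet", "excel", "export", "integrat"],
}


def tag_complaint(text: str) -> str:
    """Return the first theme whose keywords appear in the complaint."""
    lowered = text.lower()
    for theme, keywords in THEMES.items():
        if any(keyword in lowered for keyword in keywords):
            return theme
    return "other"


def rank_themes(complaints) -> list:
    """Themes ordered from most to least frequent."""
    return Counter(tag_complaint(c) for c in complaints).most_common()


complaints = [
    "Support is so slow, I waited two days",
    "Too expensive for a small shop",
    "I still export everything to Excel",
    "Replies are delayed every Monday",
    "Long wait for a reply",
]
print(rank_themes(complaints)[0])  # ('slow_response', 3)
```

The top-ranked theme is the natural candidate for your MVP's single core AI function.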

Building and Testing Your AI-Driven MVP

Once you've nailed down your hypothesis and identified your target personas, it's time to get building. Thanks to AI tools, the gap between idea and execution has shrunk dramatically. What used to take weeks can now often be achieved in just days - or even hours [3]. But don’t let speed compromise clarity. With the "Lean AI" approach, the focus is on starting small - perhaps with a basic classification model - to prove value before scaling up [4]. The goal here isn’t to create something perfect but to gather insights quickly. As Thinslices aptly puts it:

"The goal of an MVP is insight, not elegance. AI tools help you get to that insight faster - often by reducing repetitive work and enabling leaner teams to move sooner" [3].

This mindset lays the groundwork for efficient development and rapid refinement.

AI-driven MVPs also shift the focus of your team. Instead of sweating the details of UI components, backend logic, or testing, you can lean on automation to handle much of the heavy lifting [3]. Take Thinslices’ experiment in early 2025: they developed an inventory management MVP in just 72 hours using a junior front-end developer with no backend experience. By leveraging AI platforms like Lovable.dev and Bolt.new, they built a tool with role-based logins, stock alerts, and auto-decrement logic - without writing custom APIs or backend scaffolding [3]. AI tools can also speed up repetitive coding, testing, and documentation work by 100–200% [7].

AI Prototyping Tools

When it comes to prototyping, there are generally three stages: Lo-Fi (for quick conceptualisation), Early Build/MVP (for functional testing), and Production (for scaling and reliability) [6]. For the MVP stage, tools like Figma, Uizard, Lovable.dev, and Bolt.new are incredible time-savers. Figma and Uizard make design faster, while Lovable.dev and Bolt.new can generate functional code, sparing you from writing every line yourself [3][6].

For example, Google AI Studio uses "zero-shot" prompts to spin up functional applications, like Streamlit apps, in seconds [6]. And in late 2025, MobiDev proved the efficiency of AI-assisted coding when they built a CRM MVP for Treegress. The project saved both time and budget, allowing the team to focus on strategic priorities like security and business alignment [7].

To avoid bloated features and keep your launch on track, try using the MoSCoW method (Must-have, Should-have, Could-have, Won’t-have) [10]. Start with a single core AI function and build around it. Tools like GitHub Copilot and LangChain can help with backend logic, while Testim and AgentHub streamline testing [3]. For instance, KPN was able to slash testing time from 2.5 hours per component to just 5 minutes for all components using AI-powered testing tools [3].
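
A MoSCoW backlog is easy to encode so that the MVP scope is derived from it rather than argued about. This is an illustrative sketch with a made-up backlog; only the four MoSCoW categories come from the method itself.

```python
from enum import IntEnum


class MoSCoW(IntEnum):
    MUST = 1
    SHOULD = 2
    COULD = 3
    WONT = 4


def mvp_scope(backlog: dict, include: MoSCoW = MoSCoW.MUST) -> list:
    """Features at or above the chosen priority, highest priority first."""
    selected = [feature for feature, priority in backlog.items() if priority <= include]
    return sorted(selected, key=lambda feature: backlog[feature])


# Hypothetical backlog for a support-automation MVP.
backlog = {
    "AI reply drafting": MoSCoW.MUST,
    "role-based login": MoSCoW.MUST,
    "sentiment dashboard": MoSCoW.SHOULD,
    "multi-language support": MoSCoW.COULD,
    "voice assistant": MoSCoW.WONT,
}
print(mvp_scope(backlog))  # ['AI reply drafting', 'role-based login']
```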

Testing with Synthetic Data

Before you unleash your MVP on users, it’s crucial to validate its functionality. Synthetic data can be a lifesaver here. It fills gaps in your existing datasets, benchmarks your model’s performance, and helps avoid frustrating early adopters with buggy outputs [2]. Synthetic data generators can mimic behaviours like browsing, purchasing, or abandoning carts, giving you realistic datasets to test against [2].

Gartner estimates that nearly 30% of generative AI projects won’t make it past the proof-of-concept stage by the end of 2025, often because they skip this critical validation step [2]. Instead of trying to replicate an entire complex system, focus on testing one high-value workflow end-to-end [2][3]. For instance, if you’re building a recommendation engine, synthetic data can simulate user interactions to see if your AI suggests relevant products.
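
For the recommendation-engine case, one common check is hit rate at k: hold back one item per synthetic user and see whether it appears in their top-k suggestions. The sketch below uses a most-popular baseline recommender, which is an assumption for illustration; your AI recommender would need to beat it to be worth keeping. The user histories are invented.

```python
from collections import Counter


def popularity_recommender(history: dict):
    """Baseline: recommend the most-bought items the user has not already seen."""
    counts = Counter(item for items in history.values() for item in items)

    def recommend(user: str) -> list:
        seen = set(history.get(user, []))
        return [item for item, _ in counts.most_common() if item not in seen]

    return recommend


def hit_rate_at_k(recommend, held_out: dict, k: int = 5) -> float:
    """Share of users whose held-out item appears in their top-k recommendations."""
    hits = sum(1 for user, item in held_out.items() if item in recommend(user)[:k])
    return hits / len(held_out)


# Hypothetical synthetic interactions: each user's last purchase is held out.
history = {"u1": ["mug", "tea"], "u2": ["mug", "kettle"],
           "u3": ["tea", "kettle"], "u4": ["mug"]}
held_out = {"u1": "kettle", "u2": "tea", "u3": "mug", "u4": "lamp"}
print(hit_rate_at_k(popularity_recommender(history), held_out, k=2))  # 0.75
```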

To ensure consistency, set up a data contract with rules for schema, freshness, and quality [2]. You can also use expert-labelled datasets for benchmarking your synthetic data’s accuracy. Running your AI model in shadow mode or using A/B testing early on can help you spot weaknesses without impacting all users [15]. Shadow mode, for example, lets you run your AI alongside an existing system or manual process to compare results before a full rollout. And don’t forget: standardising and cleaning all data - whether real or synthetic - is key to avoiding errors or biases creeping into your AI [2].
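
A data contract can begin as a few explicit checks run on every record before it reaches the model. This is a minimal sketch, assuming a hypothetical order-event feed with three fields, a 24-hour freshness window and a non-negative amount rule; real contracts are usually enforced in the pipeline or with a dedicated validation library.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract for an order-event feed.
CONTRACT = {
    "schema": {"user_id": str, "amount_gbp": float, "event_time": datetime},
    "max_age": timedelta(hours=24),                 # freshness rule
    "quality": {"amount_gbp": lambda v: v >= 0},    # value rules per field
}


def check_record(record: dict, now: datetime, contract: dict = CONTRACT) -> list:
    """Return a list of contract violations (empty means the record passes)."""
    errors = []
    for name, expected_type in contract["schema"].items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"wrong type: {name}")
    if errors:
        return errors  # skip freshness and quality checks on malformed records
    if now - record["event_time"] > contract["max_age"]:
        errors.append("stale record")
    for name, rule in contract["quality"].items():
        if not rule(record[name]):
            errors.append(f"quality rule failed: {name}")
    return errors


now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
record = {"user_id": "u1", "amount_gbp": -5.0,
          "event_time": datetime(2025, 1, 2, 9, 0, tzinfo=timezone.utc)}
print(check_record(record, now))  # ['quality rule failed: amount_gbp']
```

The same checks apply to real and synthetic records alike, which is what keeps the two comparable.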

Collecting User Feedback with AI

Once your MVP is up and running, continuous user feedback is vital for improvement. AI-powered tools like chatbots and survey platforms (e.g., FeedbackbyAI) can gather real-time input to validate your business idea [7]. Sentiment analysis tools can also monitor forums, social media, and reviews, flagging trends automatically [8]. For example, if users on Reddit or G2 raise concerns, natural language processing can identify and highlight these issues [8].

A Human-in-the-Loop (HITL) process can be a game-changer here. By having experts review AI recommendations, correct errors, and feed improvements back into the system, your MVP becomes smarter and more reliable over time [2]. Pay close attention to user behaviours - like repeat visits or feature usage - rather than relying solely on surveys, as actions often speak louder than words [8]. On the technical side, track metrics such as model accuracy, latency, inference costs, and error rates to spot any issues [2]. Automated alerts for model drift can also help your team address performance dips as the AI encounters new data [2].
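
Drift alerts can be fed directly by the HITL reviews, since every reviewer correction gives you a labelled example. The sketch below tracks rolling accuracy over the last N reviewed predictions and alerts when it falls a set margin below the launch baseline. Note that this monitors performance decay, a common proxy for drift, rather than shifts in the input data itself; the class name, window size and tolerance are hypothetical.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Alerts when rolling accuracy on human-reviewed predictions drops below baseline."""

    def __init__(self, baseline_accuracy: float, window_size: int = 200,
                 tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.results = deque(maxlen=window_size)  # True = model agreed with reviewer

    def record(self, prediction, reviewer_label) -> None:
        self.results.append(prediction == reviewer_label)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def should_alert(self) -> bool:
        # Only judge a full window, so a handful of early errors cannot trigger alerts.
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_accuracy=0.90, window_size=10)
for _ in range(10):
    monitor.record("billing", "billing")
print(monitor.should_alert())  # False
```

In a real review queue you would call `record` whenever an expert confirms or corrects an output, and route `should_alert()` to whatever alerting channel the team already uses.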

As Adrenalin puts it:

"Speed to learning beats speed to features when AI makes feature development trivial" [8].

To ensure smooth operations, consider using an abstraction layer or AI gateway to manage API calls and monitoring. This way, a vendor outage or pricing change won’t derail your testing [2]. Finally, track both business KPIs - like conversion rates - and AI-specific metrics, such as cost-per-inference and latency. Tools like Hotjar and Mixpanel can help you analyse user interactions and pinpoint those "aha moments" that drive retention [3].
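
An AI gateway can be as small as a wrapper that tries providers in priority order and logs latency and cost for each call. In this sketch the providers are plain Python callables standing in for vendor SDK calls, and the flat per-call prices are assumptions; nothing here reflects a specific vendor's API.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Provider:
    name: str
    call: Callable[[str], str]  # hypothetical wrapper around a vendor SDK call
    cost_per_call_gbp: float    # assumed flat price, for illustration only


@dataclass
class AIGateway:
    providers: list             # Provider objects, in priority order
    log: list = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        last_error = None
        for provider in self.providers:
            start = time.perf_counter()
            try:
                result = provider.call(prompt)
            except Exception as exc:  # outage, rate limit, timeout...
                last_error = exc
                self._record(provider.name, False, start, 0.0)
                continue  # fall back to the next provider
            self._record(provider.name, True, start, provider.cost_per_call_gbp)
            return result
        raise RuntimeError("all AI providers failed") from last_error

    def _record(self, name: str, ok: bool, start: float, cost: float) -> None:
        self.log.append({"provider": name, "ok": ok,
                         "latency_s": time.perf_counter() - start, "cost_gbp": cost})


def flaky_vendor(prompt: str) -> str:
    raise ConnectionError("simulated vendor outage")


def backup_vendor(prompt: str) -> str:
    return f"draft reply for: {prompt}"


gateway = AIGateway([Provider("primary", flaky_vendor, 0.002),
                     Provider("backup", backup_vendor, 0.001)])
print(gateway.complete("Where is my order?"))
```

Because every call lands in `log`, the same wrapper gives you the latency and cost-per-inference figures mentioned above without touching product code.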

Analysing Results and Improving Your MVP

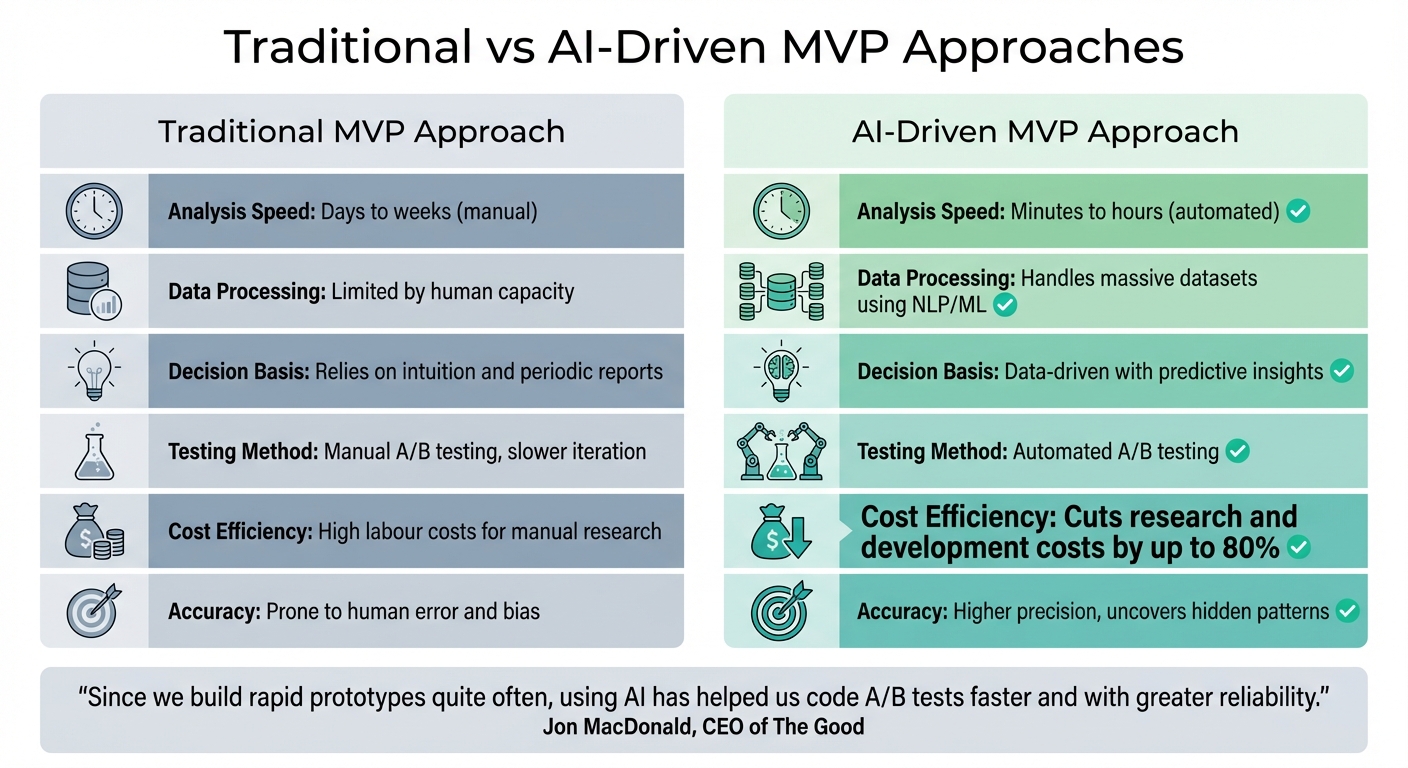

Traditional vs AI-Driven MVP Development: Speed, Cost and Accuracy Comparison

Once you've gathered user feedback, the next challenge is making sense of it. This is where the real magic happens - transforming raw data into decisions that bring you closer to achieving product-market fit. Today, AI tools make this process a whole lot easier. They can sift through mountains of support tickets, reviews, and user interactions in minutes - something that could take weeks if done manually [18]. The trick is spotting patterns that reveal which features are working and which ones are falling flat.

AI Tools for Data Analysis

Modern AI platforms handle much of the heavy lifting when it comes to data analysis. Tools like RapidMiner and DataRobot are great for clustering user behaviours and running predictive analytics. They help you figure out which features keep users coming back and which ones they’re ignoring [12]. If you want to get a feel for how users really feel, sentiment analysis tools like MonkeyLearn and IBM Watson can break down emotions from reviews on platforms like Amazon, Google, and even social media [9].

Take Chime, for example. In 2025, this fintech company used AI to analyse transaction data and user behaviour. The result? A 25% boost in customer satisfaction and a 15% drop in fraud-related issues [20][21]. That’s a big win, and it shows how AI can directly impact both user experience and operational efficiency.

For a deeper dive, semantic clustering tools (think ChatGPT with semantic analysis) can group user feedback to pinpoint recurring pain points [3]. Another metric to keep an eye on is the override rate - how often humans need to correct AI outputs. A high override rate can shine a light on flaws in your product logic [2].
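
The override rate is easiest to act on when it is broken down per AI feature, so you can see which part of the product logic humans keep correcting. A minimal sketch over a hypothetical review log; the 0.3 alert threshold is an arbitrary example.

```python
from collections import defaultdict


def override_rates(reviews) -> dict:
    """Per-feature share of AI outputs that a human reviewer changed."""
    totals = defaultdict(int)
    overrides = defaultdict(int)
    for r in reviews:
        totals[r["feature"]] += 1
        if r["final"] != r["ai_output"]:
            overrides[r["feature"]] += 1
    return {feature: overrides[feature] / totals[feature] for feature in totals}


# Hypothetical review log from a human-in-the-loop queue.
reviews = [
    {"feature": "triage", "ai_output": "billing", "final": "billing"},
    {"feature": "triage", "ai_output": "bug", "final": "bug"},
    {"feature": "triage", "ai_output": "billing", "final": "account"},
    {"feature": "triage", "ai_output": "bug", "final": "bug"},
    {"feature": "refund_decision", "ai_output": "approve", "final": "reject"},
    {"feature": "refund_decision", "ai_output": "reject", "final": "approve"},
]
rates = override_rates(reviews)
flagged = [feature for feature, rate in rates.items() if rate > 0.3]
print(rates, flagged)  # {'triage': 0.25, 'refund_decision': 1.0} ['refund_decision']
```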

And don’t forget to balance business goals with AI metrics. A handy technique is shadow deployment - running your AI model alongside your current system to compare its predictions with real-world user actions. This approach can help you test changes without fully committing right away. In one case, a global software company used this method to slash Mean Time to Resolution (MTTR) for support tickets by 65%, thanks to an AI system that pinpointed root causes and resolved issues faster [19].
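
Mechanically, a shadow deployment serves the live system's output to users while logging the new model's output for the same input, then scores both against what actually happened. The ticket-routing rules below are hypothetical stand-ins for a live system and a candidate model.

```python
def shadow_compare(cases, live_system, shadow_model):
    """Serve the live system's output; log and score the shadow model silently."""
    served, agree, live_hits, shadow_hits = [], 0, 0, 0
    for inputs, actual in cases:
        live = live_system(inputs)
        served.append(live)            # users only ever see this output
        shadow = shadow_model(inputs)  # computed and logged, never shown
        agree += live == shadow
        live_hits += live == actual
        shadow_hits += shadow == actual
    n = len(cases)
    return served, {"agreement": agree / n,
                    "live_accuracy": live_hits / n,
                    "shadow_accuracy": shadow_hits / n}


# Hypothetical ticket routing: the live rule sends everything to "general".
def live_rule(ticket: str) -> str:
    return "general"


def candidate_model(ticket: str) -> str:
    return "billing" if "refund" in ticket.lower() else "general"


cases = [("I want a refund", "billing"), ("App crashes on login", "general"),
         ("Refund please", "billing"), ("How do I reset my password?", "general")]
served, report = shadow_compare(cases, live_rule, candidate_model)
print(report)  # {'agreement': 0.5, 'live_accuracy': 0.5, 'shadow_accuracy': 1.0}
```

Only when the shadow model's accuracy holds up over real traffic would you promote it to serve users.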

These AI-driven insights aren’t just about speed - they give you a sharper, more detailed view of user behaviour, helping you refine your MVP with confidence.

Standard vs AI-Driven MVP Approaches

Once you’ve got these insights, it’s worth comparing traditional methods of analysis with what AI brings to the table. It’s not just about doing things faster; it’s about doing them smarter. Traditional approaches often rely on manual surveys, periodic reports, and gut instincts. While that can work, it’s slow - sometimes taking days or weeks to get actionable insights [18][20]. On the other hand, AI-driven methods offer continuous monitoring, predictive analytics, and automated A/B testing, delivering insights in just hours [18][20].

Here’s a quick comparison:

| Aspect | Traditional MVP Approach | AI-Driven MVP Approach |
|---|---|---|
| Analysis Speed | Days to weeks (manual) | Minutes to hours (automated) [18][20] |
| Data Processing | Limited by human capacity | Handles massive datasets using NLP/ML [20] |
| Decision Basis | Relies on intuition and periodic reports | Data-driven with predictive insights [20] |
| Testing Method | Manual A/B testing, slower iteration | Automated A/B testing [18][20] |
| Cost Efficiency | High labour costs for manual research | Cuts research and development costs by up to 80% [20][21] |
| Accuracy | Prone to human error and bias | Higher precision, uncovers hidden patterns [20] |

"Since we build rapid prototypes quite often, using AI has helped us code A/B tests faster and with greater reliability. We're able to produce rapid prototypes quickly, increasing our testing volume and rapidly validating hypotheses."

That’s a quote from Jon MacDonald, CEO of The Good [20]. It really captures the essence of what AI can do - speed things up without sacrificing quality. But let’s not forget, while AI is brilliant for generating insights and drafts, human expertise is still essential. You need that human touch to refine ideas and make sure your prototype is more than just flashy “demo-ware” [17].

Case Study: Metamindz's CTO-Led MVP Development

Let’s dive into how a hands-on CTO approach can transform AI-driven MVPs from just an idea into something tangible, ready for the market. This case study highlights how Metamindz, founded by Lev Perlman (Technical Adviser at Google for Startups and Loyal VC), takes a no-nonsense, CTO-led approach to MVP development.

AI MVPs need a rock-solid foundation from the get-go, and that’s where Metamindz stands out. Instead of dealing with project managers or account execs, you work directly with an active CTO. This means you get practical, high-level guidance - think architecture planning, code reviews, and delivery strategies - straight from seasoned system builders.

Here’s why this approach matters: 85% of AI projects fail, often due to poor strategy and governance [17]. On top of that, 42% of failed AI startups cite a lack of market demand as their main downfall, meaning they built solutions to problems no one had [16]. Metamindz flips this script by focusing on building MVPs that deliver real business outcomes, not just flashy tech demos. This CTO-led model ensures your MVP isn’t just investor-ready - it’s aligned with actual market needs.

Fractional CTO Services for AI MVPs

Metamindz offers a fractional CTO service (£2,750 per month) that’s all about guiding startups through the tricky early stages - validating your hypothesis, designing a lean architecture, and building a focused MVP. The process kicks off with a deep dive into your tech setup, followed by crafting a scalable architecture and assembling the right team. Unlike traditional agencies that might overcomplicate things, Metamindz keeps it simple, building only what’s necessary to validate your AI’s core functionality.

Why is this approach so effective? Because complexity is often the enemy of progress. In fact, 75% of companies report little to no meaningful returns from AI projects because they overcomplicate instead of focusing on validation [4]. As Monterail puts it:

"Success comes from treating AI like the powerful but unforgiving technology it actually is: requiring clean data, realistic expectations, and proven frameworks that bridge the gap between AI fantasy and business reality." [4]

With Metamindz’s fractional CTO model, every AI feature is tied to a clear, measurable business goal - whether that’s cutting costs, boosting user satisfaction, or testing a new revenue stream. Their UK and Europe-based teams work directly with you, integrating seamlessly into your workflow through shared Slack channels, weekly check-ins, and daily updates. You get a mix of senior engineers and experienced CTOs, ensuring the right balance of strategy and execution.

AI Code Oversight with Vibe-Code Fixes

Now, let’s talk about the code. AI-generated code often looks impressive in demos but can fall apart when faced with real-world demands. That’s where Vibe-Code Fixes comes in. This service ensures your AI-generated code is reliable, compliant, and scalable by refining the UI, handling complex business logic, and properly integrating backend services [17][22].

Here’s the thing: many AI prototypes look great on the surface but lack the robustness needed for production [17]. Without human oversight, AI unpredictability can become a major issue, especially when dealing with privacy regulations like GDPR or ensuring data security. Considering only 30% of AI experiments ever make it to production [4], having a CTO review your AI code can mean the difference between impressing investors with a flashy prototype and actually delivering a product that works.

Technical Due Diligence for MVPs

Before scaling or pitching to investors, you need to make sure your MVP can handle growth. Metamindz’s technical due diligence service (£3,750) thoroughly evaluates your MVP’s scalability, security, and overall technical health. You’ll get a detailed report with actionable recommendations to fix bugs, improve security, and ensure scalability.

This service is crucial, especially since nearly 30% of generative AI projects are expected to fail to move beyond proof-of-concept by the end of 2025 [2]. By examining actual model behaviour and user interaction data rather than static mockups, the due diligence process validates the core AI logic early and helps you avoid common pitfalls.

| Service Component | Focus Area | Primary Benefit |
|---|---|---|
| Fractional CTO | Hypothesis & Architecture | Minimises wasted effort and ensures market fit |
| Vibe-Code Fixes | AI Code Oversight | Guarantees reliability and compliance |
| Due Diligence | Scalability & Security | Prepares your MVP for investors and growth |

Conclusion: Achieving Product-Market Fit with AI-Driven MVPs

Reaching product-market fit with AI isn’t about crafting the flashiest MVP - it’s about quickly validating a clear hypothesis and figuring out what actually works. Transitioning from traditional MVPs to AI-driven ones means you’re not just gauging market interest anymore; you’re also testing if the intelligent features you’re building - like automation, personalisation, or prediction - actually deliver better results [2][4]. Monterail sums it up perfectly:

"Building production AI isn't a conversation. It's an engineering discipline" [4].

Consider this: AI startups globally raised over £80 billion in 2024 [1]. That’s a huge number, but throwing money at AI doesn’t guarantee success. A leaner approach is to roll out a minimal version of your AI functionality and rely on data to guide your decisions [2][4]. This means tracking not just business metrics like signups or retention, but also AI-specific data - things like latency, accuracy, and cost-per-inference - from the very beginning [2].

While speed is crucial, oversight is just as important. AI tools can slash development time dramatically. For instance, MobiDev managed to deliver a CRM MVP in just 18 hours instead of the usual 130, cutting 76% of the budget in the process [7]. But speed without proper checks can backfire. You need to ensure that the AI-generated code isn’t just flashy but also secure, scalable, and aligned with real business goals - not just impressive demos that crumble under real-world demands.

Once you’ve nailed the basics, it’s all about refining strategically. Set clear metrics to act as guardrails, use shadow deployments to spot weaknesses early, and focus on solving real problems rather than chasing shiny, technical novelties [2][15]. It’s worth noting that nearly 30% of generative AI projects will fail to move beyond proof-of-concept by the end of 2025 [2]. The ones that succeed treat AI like the engineering discipline it is, with solid data pipelines, realistic expectations, and frameworks that have been tested and proven [4].

FAQs

How do AI-driven MVPs help save time and reduce costs during development?

AI-driven MVPs make the development process much faster and more cost-effective. They achieve this by tapping into pre-trained AI models, utilising low-code or no-code platforms, and narrowing the scope to a handful of essential features. What would normally take months can now be done in just a few weeks.

By concentrating on the core functionality and making use of existing AI resources, businesses can keep costs in check - often spending tens of thousands of pounds instead of the six-figure sums typically needed for fully developed products. This streamlined approach is perfect for quickly testing whether a product resonates with the market, all while keeping risks and upfront investment to a minimum.

What mistakes should I avoid when creating an AI-driven MVP?

When creating an AI-driven MVP, there are a few common missteps that can throw you off course. Here's how to steer clear of them:

- Define the problem first. It’s tempting to dive straight into building AI features, but without a solid grasp of the user’s pain points or a clear way to measure success, your MVP risks becoming little more than a flashy tech demo. The goal is to test product-market fit, not just showcase AI capabilities.

- Don’t over-engineer in the beginning. Pouring too much time and money into complex frameworks or large AI models before you’ve validated demand can slow you down and rack up unnecessary costs. Keep things simple until you know you’re on the right track.

- Focus on data quality. AI is only as good as the data it’s trained on. Without proper validation and early checks for security, scalability, and compliance, you could end up with unreliable results - or worse, expose yourself to risks you didn’t anticipate.

The key is to keep your MVP lean and practical. By clearly defining the problem, setting a disciplined scope, and validating early, you’ll create an AI-driven MVP that’s not just functional but also a meaningful step towards finding product-market fit.

How can I effectively validate AI-generated user personas?

To ensure your AI-generated user personas are on point, start by checking if they genuinely reflect real-world behaviours and can accurately predict how users might react to your MVP. Break the persona into specific hypotheses - like demographics, pain points, and preferences - and test these through surveys or interviews. Collect a mix of data: quantitative insights (e.g., completion rates, willingness to pay) and qualitative feedback. This will help you figure out if the persona matches reality or needs tweaking.

Once you've validated the persona, weave it into a low-fidelity AI-driven MVP for testing. Use controlled experiments like A/B testing or cohort analysis to see how it performs. Keep an eye on metrics such as conversion rates, churn reduction, or task completion times. If the results are way off from expectations, it's a sign the persona might need a second look.
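
To tell whether a difference in conversion rates between two variants is more than noise, a standard two-proportion z-test is a reasonable starting point for large samples. This sketch uses only the standard library; the visitor and conversion counts are invented.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical results: control converts 120/1000, persona-targeted variant 160/1000.
z, p = two_proportion_z_test(120, 1000, 160, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (often 0.05) suggests the variants genuinely behaved differently; it does not by itself prove the persona is right. The test also assumes reasonably large samples and breaks down if both variants convert at 0% or 100%.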

Need expert help? Metamindz offers fractional CTO services to audit your data pipelines, review AI models, and make sure you're complying with UK data privacy standards. Their hands-on approach ensures you can build reliable, effective AI-driven MVPs that hit the mark with your audience.