ISO 42001 for AI: Certification Explained

ISO 42001 is the first international management system standard for AI. Published in December 2023, it gives organisations a framework for designing, deploying, and maintaining AI systems while addressing risks such as bias, security weaknesses, and lack of transparency. It’s particularly relevant now that the EU AI Act, which entered into force in August 2024 with obligations phasing in from 2025, covers much of the same ground. Certification signals accountability, offers a structured way to manage AI risks, and helps build trust with clients and stakeholders.

Here’s what you need to know:

- What it covers: Lifecycle management of AI systems - from design to retirement, focusing on transparency, accountability, and risk assessments.

- Who it’s for: Any organisation developing, using, or managing AI tools, from startups to global enterprises.

- Why it matters: Roughly 70% of its control areas overlap with EU AI Act requirements, making it a practical way to support compliance and avoid legal headaches.

- Process: Takes 6–12 months, costs £60,000–£160,000 initially, and requires annual audits to maintain certification.

- Success stories: Microsoft, Whistic, and Synthesia have already achieved certification, boosting their credibility and market positioning.

If you’re already ISO 27001 certified, good news - about 60% of the controls overlap, which can save effort. Certification isn’t just a box-ticking exercise; it’s about embedding responsible AI practices into your operations. To get ahead, start with a gap analysis and get leadership on board.

What is ISO 42001?

ISO/IEC 42001:2023 is the first-ever international standard tailored specifically for Artificial Intelligence Management Systems (AIMS). Released in December 2023, it provides organisations with a structured way to oversee AI systems throughout their lifecycle - from initial design to deployment and eventual retirement [4].

Unlike standards that focus only on technical aspects, ISO 42001 takes a management system approach. This means it doesn’t just tackle technical issues but also addresses organisational gaps like unclear responsibilities and weak oversight [1].

The standard is built on the Annex SL high-level structure, making it easy to integrate with other well-known management systems like ISO 27001 (Information Security) and ISO 9001 (Quality Management).

A key feature of ISO 42001 is its risk-based approach. Organisations are required to perform impact assessments and identify risks unique to AI, such as algorithmic bias, model drift, and unintended outcomes. The standard is flexible and can be applied to organisations of all sizes, from small startups to global corporations, as well as public sector bodies. It spans 51 pages and costs CHF 225 (around £200) [4][5].

Below, we’ll explore the purpose of ISO 42001 and why organisations should consider certification.

The Purpose of ISO 42001

ISO 42001 was created to tackle the distinct challenges posed by AI systems. These include issues like algorithmic bias, lack of transparency (often referred to as the "black box" problem, especially with advanced AI models like Large Language Models), model drift (when AI performance declines over time), and risks from increasingly autonomous AI systems.

The standard strikes a balance between fostering innovation and ensuring safety. It offers a governance framework to manage these risks while still supporting efficiency and technological progress. What’s particularly noteworthy is how it shifts the conversation from vague ideas of "responsible AI" to concrete, auditable governance practices [1]. This makes it highly relevant for organisations looking to stay compliant with emerging regulations.

ISO 42001 also aligns well with new legal frameworks. For instance, about 70% of its control areas overlap with the EU AI Act, which entered into force in August 2024 and whose obligations began to apply in stages from 2025 [3]. It uses the "Plan-Do-Check-Act" (PDCA) methodology found in other ISO standards, covering the entire AI lifecycle [4].

Who Needs ISO 42001 Certification?

ISO 42001 benefits both organisations that develop AI systems and those that deploy or use them by providing clear guidelines on accountability and oversight.

The standard is relevant to any organisation involved in developing, offering, or using AI systems. This includes companies creating AI products, businesses integrating AI into their operations, organisations using AI for decision-making, and those managing third-party AI tools.

- For AI developers, the standard ensures accountability in areas like model training and data sourcing.

- For deployers, it offers frameworks for ongoing performance monitoring and human oversight.

Certification is particularly useful for organisations aiming to demonstrate responsible AI practices, especially in enterprise sales. It’s becoming a common requirement in Request for Proposal (RFP) processes [3].

Several well-known companies, including Microsoft, Whistic, and Synthesia, have already obtained certification. It’s especially relevant for organisations operating in or selling to the EU, where the AI Act imposes binding requirements on high-risk AI systems. Achieving ISO 42001 certification helps demonstrate due diligence and provides strong evidence of having the right governance structures in place, which is vital in regulated markets.

Core Components of ISO 42001

ISO 42001 revolves around three key elements that together create a structured framework for governing AI systems. If your organisation is considering certification, getting a handle on these components is a must.

AI Management Systems (AIMS) Integration

An AI Management System (AIMS) acts as the backbone for managing AI systems, covering everything from design to retirement. It weaves AI governance into your existing processes, making roles and responsibilities clearer and boosting oversight.

The framework is built on the Plan-Do-Check-Act (PDCA) cycle, which ensures that your governance evolves alongside AI technology. One of the standout features of AIMS is its compatibility with other standards. For instance, if you're already certified under ISO 27001, you can integrate AIMS by reusing shared controls, saving both time and money in the process.

"Responsible AI is now a business imperative. ISO 42001 provides organisations with a clear, certified pathway to operational excellence, risk mitigation, and market credibility." - José Fernando Guzmán, CER Digital Director, Bureau Veritas Global [6]

To get started, conduct a thorough AI discovery phase to identify all systems in use, including any "shadow AI" that may be flying under the radar. Set up a cross-functional governance committee - not just a tech team - bringing in experts from legal, HR, and ethics to ensure well-rounded oversight. Alongside AIMS, it's crucial to carry out regular risk and impact assessments to stay ahead of potential AI-related issues.

Risk and Impact Assessments

Once your AIMS is in place, the next step is risk and impact assessment, which is central to Clause 6 (Planning) in ISO 42001. These assessments aren't a one-off activity - they continue across the entire AI lifecycle, from the initial idea to system retirement.

The focus here is on understanding and mitigating risks such as algorithmic bias, lack of transparency, safety concerns, and unintended consequences. While traditional IT risk frameworks are a starting point, you'll need to expand them with an AI-specific lens, addressing issues like fairness, model drift, and unexpected behaviours.

The AI Impact Assessment (AIIA) is a tool designed to evaluate how AI systems affect people and society. It considers factors like fairness, privacy, and even environmental effects. These assessments also serve as proof of due diligence, which is particularly handy since ISO 42001 aligns with around 70% of the EU AI Act's requirements [3].

To put this into practice, set up systems to continuously monitor AI performance, looking for issues like accuracy drops or model drift. Define thresholds that trigger manual reviews or automated alerts. Don't forget to include AI systems provided by third parties or vendors - your organisation is ultimately responsible for any risks they introduce.
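The threshold idea above can be sketched in a few lines of Python. This is a minimal illustration, not something ISO 42001 prescribes: the `Thresholds` class, the `triage` function, the limit values, and the rule that two breaches escalate to a manual review are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Hypothetical limits; real values depend on the system and its risk profile."""
    min_accuracy: float = 0.90     # below this, accuracy counts as degraded
    max_drift_score: float = 0.20  # above this, input drift counts as significant


def triage(accuracy: float, drift_score: float,
           limits: Thresholds = Thresholds()) -> str:
    """Return 'ok', 'alert' (one limit breached) or 'manual_review' (both breached)."""
    breaches = 0
    if accuracy < limits.min_accuracy:
        breaches += 1
    if drift_score > limits.max_drift_score:
        breaches += 1
    return ("ok", "alert", "manual_review")[breaches]


if __name__ == "__main__":
    print(triage(accuracy=0.95, drift_score=0.05))  # ok
    print(triage(accuracy=0.88, drift_score=0.05))  # alert
    print(triage(accuracy=0.88, drift_score=0.31))  # manual_review
```

In practice the same check would run on a schedule for every monitored system, including vendor-provided ones, and each result would be logged so there is a record to show auditors.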

Reference Controls (Annex A)

Annex A is where things get practical. It includes 38 specific reference controls spread across 9 sections [2][7]. These controls give you a hands-on guide for managing AI risks, ensuring data quality, and overseeing system lifecycles, including areas like security, governance, and data protection.

A key requirement is creating a Statement of Applicability (SoA). This document explains which controls apply to your organisation and why, offering flexibility to adapt the standard to your unique needs.
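As a rough sketch, an SoA can be kept as structured data so that missing justifications are easy to spot. The `SoAEntry` fields, the `CTRL-…` identifiers, and the control titles below are placeholders invented for illustration; they are not the numbering or wording of Annex A.

```python
from dataclasses import dataclass


@dataclass
class SoAEntry:
    control_id: str     # placeholder ID, not the standard's own numbering
    title: str
    applicable: bool
    justification: str  # why the control is included or excluded


def missing_justifications(entries: list[SoAEntry]) -> list[str]:
    """Return the IDs of entries whose justification is blank."""
    return [e.control_id for e in entries if not e.justification.strip()]


soa = [
    SoAEntry("CTRL-01", "AI policy", True, "We develop and deploy AI systems."),
    SoAEntry("CTRL-02", "Data quality for training", False, ""),
    SoAEntry("CTRL-03", "Third-party AI suppliers", True, "Vendor models are in scope."),
]

print(missing_justifications(soa))  # ['CTRL-02']
```

The point of the check is that excluded controls need a reason just as much as included ones do.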

The controls cover every stage of AI governance, from sourcing data and training models to monitoring deployments and planning retirements. If you're already familiar with ISO 27001, you'll recognise many of these controls, which can make implementation a smoother ride.

Steps to Achieve ISO 42001 Certification

ISO 42001 Certification Process Timeline and Costs

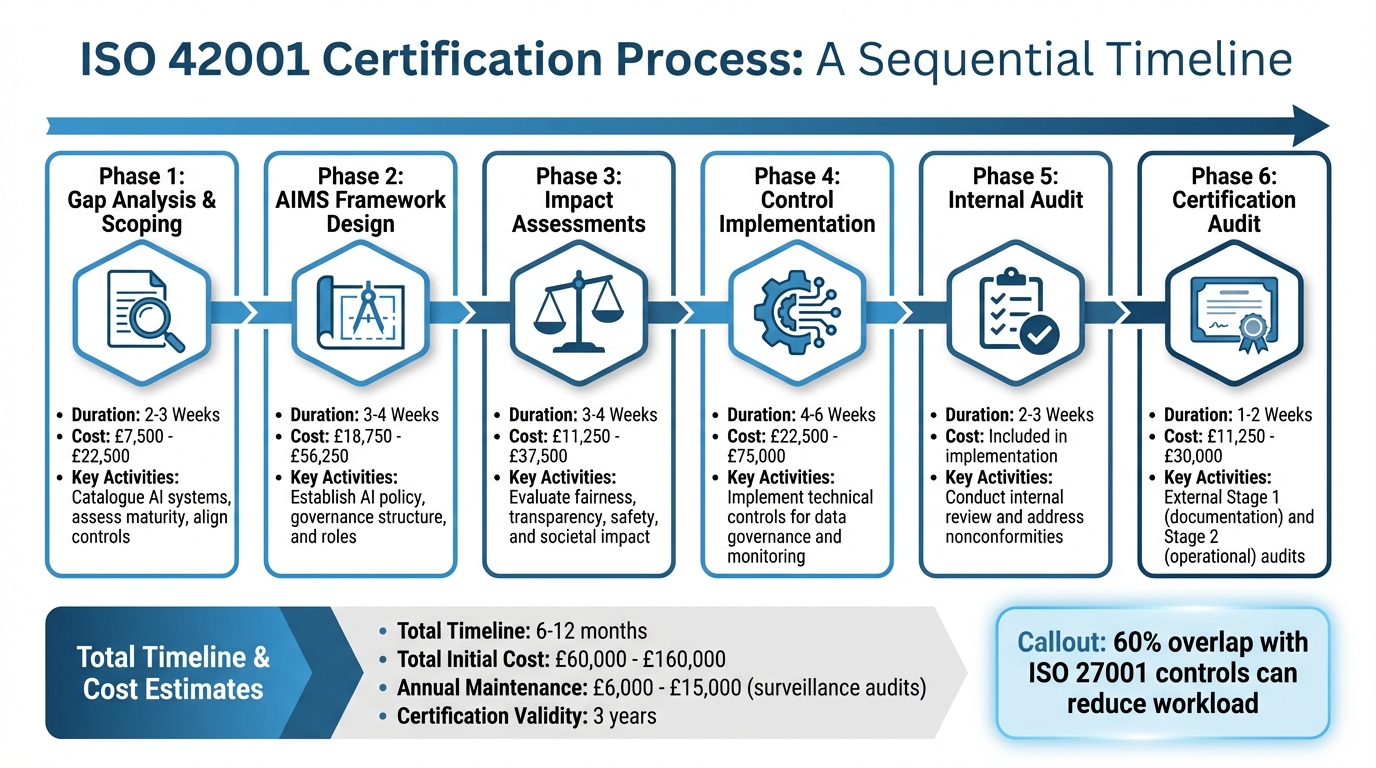

Getting ISO 42001 certification is no small feat - it’s a structured process that usually takes 6 to 12 months [2]. It’s split into three main phases: preparation, implementation, and external audits. Each phase builds on the last, so rushing through the early steps can lead to trouble down the line.

Preparation and Gap Analysis

The first step is a gap analysis. This is where you take a good, hard look at your current AI practices and compare them to the ISO 42001 requirements. Think of it as figuring out where you are now and what needs fixing. You’ll also need to define your AIMS (AI Management System) scope and identify all the AI systems in use - including any unofficial or “shadow” AI tools being used by teams.
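One simple way to record a gap analysis is to score each requirement clause and list what is still open. The sketch below uses the clause headings shared by ISO management system standards (clauses 4 to 10); the `Status` values and the sample scores are made up for illustration.

```python
from enum import Enum


class Status(Enum):
    MET = "met"
    PARTIAL = "partial"
    MISSING = "missing"


# Clause names follow the shared ISO management-system structure;
# the statuses are invented sample data.
assessment = {
    "4 Context of the organization": Status.PARTIAL,
    "5 Leadership": Status.MET,
    "6 Planning": Status.MISSING,
    "7 Support": Status.MET,
    "8 Operation": Status.PARTIAL,
    "9 Performance evaluation": Status.MISSING,
    "10 Improvement": Status.MISSING,
}


def gaps(results: dict[str, Status]) -> list[str]:
    """List clauses that are not fully met, missing ones first."""
    order = {Status.MISSING: 0, Status.PARTIAL: 1}
    open_items = [c for c, s in results.items() if s is not Status.MET]
    # sorted() is stable, so clauses keep their original order within each status
    return sorted(open_items, key=lambda c: order[results[c]])


for clause in gaps(assessment):
    print(f"{assessment[clause].value:8} {clause}")
```

A real gap analysis would go a level deeper, down to individual requirements and Annex A controls, but the same structure applies.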

Creating a complete AI inventory is a must. This means listing everything: in-house models, vendor-provided tools, and off-the-shelf software. If you already have certifications like ISO 27001 or ISO 9001, you might save some time here by reusing existing documentation and processes where there’s overlap.
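An inventory like this can start as a simple list of records. The following is a minimal sketch; the `AISystem` fields, the system names, and the rule for flagging possible shadow AI (unapproved or without an owner) are assumptions for the example rather than requirements of the standard.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystem:
    name: str
    source: str            # "in-house", "vendor" or "off-the-shelf"
    owner: Optional[str]   # accountable team; None means nobody has claimed it
    approved: bool         # False suggests a possible "shadow AI" tool


def shadow_ai(inventory: list[AISystem]) -> list[str]:
    """Names of systems that are unapproved or have no accountable owner."""
    return [s.name for s in inventory if not s.approved or s.owner is None]


def by_source(inventory: list[AISystem]) -> Counter:
    """Count systems per source category."""
    return Counter(s.source for s in inventory)


# Hypothetical sample entries
inventory = [
    AISystem("support-chatbot", "vendor", "Customer Success", True),
    AISystem("churn-model", "in-house", "Data Science", True),
    AISystem("browser-writing-plugin", "off-the-shelf", None, False),
]

print(shadow_ai(inventory))  # ['browser-writing-plugin']
print(dict(by_source(inventory)))
```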

This phase usually takes 2–3 weeks and costs between £7,500 and £22,500 [3]. During this time, you’ll need leadership buy-in, a dedicated AI governance lead, and a cross-functional team with folks from legal, HR, ethics, and data science [3].

Implementing AI Management Systems

Once you’ve identified the gaps, the next step is building your AIMS. This involves designing a framework, which takes about 3–4 weeks, followed by 4–6 weeks for rolling out technical controls [3]. The framework should cover the entire AI lifecycle - from initial development to deployment, monitoring, and eventual retirement - while sticking to the PDCA (Plan-Do-Check-Act) cycle.

Start by setting up a clear AI policy and governance structure. Define roles, create risk methodologies to tackle AI-specific issues like algorithmic bias and model drift, and document everything in a Statement of Applicability (SoA). This document explains which of the 38 Annex A controls apply to your organisation and why certain ones might not [7].

Costs for this phase range from £18,750 to £56,250 for the framework and an additional £22,500 to £75,000 for technical controls [3]. Automating monitoring systems to flag issues like bias or performance dips can make compliance easier and keep you audit-ready.

AI Impact Assessments (AIIAs) are a key part of this stage. These assessments check for fairness, transparency, safety, and broader societal impacts. Each assessment takes about 3–4 weeks per major system and costs between £11,250 and £37,500 [3]. Be sure to document any fairness trade-offs and how you defined fairness during model development - this will be scrutinised during audits.
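To make "how you defined fairness" concrete, it helps to record the chosen metric, its measured value, and the tolerance alongside the assessment. The sketch below uses demographic parity difference (the gap in positive-decision rates between groups) purely as one example of a fairness definition; ISO 42001 does not mandate this metric, and the group labels, sample decisions, and 0.2 tolerance are invented.

```python
def selection_rate(decisions: list[int]) -> float:
    """Share of positive (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_difference(by_group: dict[str, list[int]]) -> float:
    """Largest gap in selection rate between any two groups."""
    rates = [selection_rate(d) for d in by_group.values()]
    return max(rates) - min(rates)


# Hypothetical model decisions per group (1 = approved, 0 = rejected)
outcomes = {
    "group_a": [1, 1, 0, 1],  # selection rate 0.75
    "group_b": [1, 0, 0, 0],  # selection rate 0.25
}

gap = demographic_parity_difference(outcomes)

# A record like this can be attached to the impact assessment as evidence
record = {
    "fairness_definition": "demographic parity",
    "metric_value": gap,
    "tolerance": 0.2,  # assumed threshold for illustration
    "within_tolerance": gap <= 0.2,
    "trade_off_note": "Document here why this definition was chosen over others.",
}

print(record["metric_value"], record["within_tolerance"])  # 0.5 False
```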

Once your AIMS is fully set up and documented, you’re ready to move to the external audit phase.

External Audits and Certification

The final step is the external audit, performed by an accredited certification body. This happens in two stages: Stage 1 reviews your documentation and policies, while Stage 2 checks whether your controls are actually working [2][3]. These audits together take 1–2 weeks and cost between £11,250 and £30,000 [3].

Before the official audit, you’ll need to complete an internal audit and a management review to catch any issues. Think of it as a dress rehearsal - it’s better to find problems yourself than have them flagged during the formal audit.

Once certified, the certification is valid for 3 years, but you’ll need to pass annual surveillance audits to keep it [2][3]. These yearly audits cost £6,000 to £15,000 [3] and ensure your AIMS stays effective as your AI systems evolve.

| Certification Phase | Estimated Duration | Key Activities |
|---|---|---|
| Gap Analysis & Scoping | 2–3 Weeks | Catalogue AI systems, assess maturity, and align controls [3] |
| AIMS Framework Design | 3–4 Weeks | Establish AI policy, governance structure, and roles [3] |
| Impact Assessments | 3–4 Weeks | Evaluate fairness, transparency, safety, and societal impact [3] |
| Control Implementation | 4–6 Weeks | Implement technical controls for data governance and monitoring [3] |
| Internal Audit | 2–3 Weeks | Conduct an internal review and address nonconformities [3] |
| Certification Audit | 1–2 Weeks | Undertake external Stage 1 (documentation) and Stage 2 (operational) audits [3] |
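For budgeting, the per-phase durations in the table and the per-phase costs quoted in the text can be added up. The sketch below does only that arithmetic, under three assumptions: the phases run back to back with no overlap, only one major system needs an impact assessment, and the internal audit carries no separate fee because none is quoted. The function name `totals` is made up for the example.

```python
# (phase, min_weeks, max_weeks, min_cost_gbp, max_cost_gbp); None = no cost quoted
phases = [
    ("Gap analysis & scoping", 2, 3, 7_500, 22_500),
    ("AIMS framework design", 3, 4, 18_750, 56_250),
    ("Impact assessments", 3, 4, 11_250, 37_500),  # assumes one major system
    ("Control implementation", 4, 6, 22_500, 75_000),
    ("Internal audit", 2, 3, None, None),
    ("Certification audit", 1, 2, 11_250, 30_000),
]


def totals(rows):
    """Sum durations and quoted costs, treating missing costs as zero."""
    min_weeks = sum(r[1] for r in rows)
    max_weeks = sum(r[2] for r in rows)
    min_cost = sum(r[3] or 0 for r in rows)
    max_cost = sum(r[4] or 0 for r in rows)
    return min_weeks, max_weeks, min_cost, max_cost


lo_w, hi_w, lo_c, hi_c = totals(phases)
print(f"{lo_w}-{hi_w} weeks, £{lo_c:,}-£{hi_c:,}")
```

A straight sum of the itemised figures will not necessarily match the headline ranges quoted elsewhere in this article, which presumably rest on different scoping assumptions and allow for remediation time and auditor scheduling. Treat both as rough planning estimates.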

Benefits and Challenges of ISO 42001 Certification

ISO 42001 certification offers clear advantages for organisations but also comes with its own set of hurdles. Balancing these aspects is key to determining whether pursuing certification aligns with your organisation's goals and resources.

Benefits of Certification

One of the standout benefits is regulatory readiness. Certification gives organisations a structured framework to meet changing legal requirements, such as those under the EU AI Act. This proactive approach not only reduces the risk of penalties but also avoids the chaos of last-minute compliance fixes.

Another major upside is building trust with stakeholders. Certification sends a strong message to customers, investors, and employees that your organisation is committed to ethical AI practices. For example, in 2024, Synthesia became the first AI video company to achieve ISO 42001 certification. This milestone helped them strengthen relationships with over 55,000 business clients, including half of the Fortune 100. Martin Tschammer, Synthesia's Head of Security, highlighted the impact:

"A-LIGN's expertise and attention to detail helped us identify and remediate any gaps in our rigorous processes. Together, we have led the way for the rest of the industry in the adoption of this standard, fostering trust and ensuring the long-term success of AI development and use."

Certification also provides a competitive edge. Major players like Microsoft and Google already hold this certification, and it's increasingly becoming a requirement for working with enterprise clients. Early adopters can stand out in the market and unlock new business opportunities. A great example is Munich-based legal tech firm top.legal, which achieved certification in just 12 weeks in early 2025. This paved the way for them to secure a strategic enterprise deal that required certification.

Operationally, certification can streamline processes. By formalising AI usage through an AI Management System (AIMS), organisations can improve resource management, enhance data quality, and boost system reliability. It also helps identify hidden risks, such as unauthorised "shadow AI" tools, and reduces inefficiencies.

| Benefit | Impact | Example |
|---|---|---|
| Regulatory Alignment | Simplifies compliance with new AI laws | Avoids last-minute compliance efforts |
| Market Differentiation | Builds trust and strengthens competitive position | Synthesia built trust with Fortune 100 clients |
| Operational Efficiency | Improves data quality and AI lifecycle processes | top.legal secured enterprise contracts through certification |
| Risk Reduction | Identifies hidden risks like bias and shadow AI | Reduces vulnerabilities in ad hoc AI usage |

However, these benefits don’t come without challenges.

Challenges in Implementation

The certification process requires significant resources. It can take six to twelve months to complete, needing dedicated staff, detailed documentation, and ongoing costs for surveillance audits.

Strong leadership commitment is another essential factor. Top management must actively support the initiative by setting AI policies and objectives. Without this backing, resource allocation can falter, and regular AI risk assessments may be neglected.

Another hurdle is continuous monitoring. AI systems aren’t static - they evolve, which means organisations must regularly check for issues like model drift, bias, and performance changes. Setting up automated alerts and real-time monitoring systems adds technical complexity but is necessary to stay audit-ready.

The pioneering nature of ISO 42001 also brings a steep learning curve. Organisations often need external help or automation tools to manage the complexities of AI governance. For instance, top.legal used the trail AI Governance Suite to automate 80% of the manual work involved in drafting AI policies and risk assessments, making the process far more manageable.

| Challenge | Mitigation Strategy |
|---|---|
| Resource Constraints | Use automation tools for monitoring and evidence collection |
| Leadership Awareness | Position certification as a strategic advantage |
| Integration Complexity | Map ISO 42001 controls to existing frameworks like ISO 27001 |
| Continuous Monitoring Needs | Automate alerts for tracking model drift and performance |
| Evolving Regulations | Use ISO 42001 as a flexible framework aligned with global standards |

To navigate these challenges, organisations should start with a gap analysis, secure executive buy-in, and phase the implementation to ease resource demands. Smaller companies might focus on meeting essential compliance needs first, gradually expanding their governance framework as they grow.

Conclusion

ISO 42001 is reshaping how organisations approach AI governance. Instead of vague promises, it offers a clear, auditable framework that tackles common organisational pitfalls like unclear accountability, weak oversight, and gaps in data governance [1].

With the EU AI Act now in motion, ISO 42001 not only helps organisations stay ahead of regulatory requirements but also builds credibility with customers, partners, and investors. As Danny Manimbo, Managing Principal of Schellman's ISO and AI services, puts it:

"ISO 42001 serves as the world's first international management system standard dedicated specifically to AI" [1].

For businesses serious about managing AI responsibly, this makes ISO 42001 an obvious starting point.

To get started, organisations should conduct a gap analysis to pinpoint areas needing improvement, define which AI systems fall under the standard's scope, and secure leadership buy-in, ideally backed by CTO-led technical due diligence. If you're already certified under ISO 27001, you're in luck - about 60% of your existing controls can be reused [3], which significantly reduces the workload. Certification typically takes six to twelve months [2], with initial costs ranging from around £60,000 to £160,000 [3]. Once in place, this framework lays the groundwork for ongoing oversight of AI systems.

Certification isn’t a one-and-done deal, though. It requires a long-term commitment. The process follows a three-year cycle, starting with an initial audit and followed by annual surveillance reviews [2]. To stay compliant, organisations need to monitor for things like model drift, keep detailed documentation of the AI lifecycle, and establish a cross-functional governance team that includes legal, ethics, HR, and data science experts [3].

For companies developing or using AI systems - including tools like Large Language Models or agentic AI - ISO 42001 is quickly becoming the industry benchmark. Early adopters are gaining an edge in enterprise procurement, while those who delay risk missing out on opportunities where certification becomes a must-have.

FAQs

Do we need ISO 42001 if we only use third-party AI tools?

Certification is voluntary, but the standard applies to organisations that use AI as well as those that build it, so it remains relevant even if every tool you run comes from a vendor. Your organisation is still responsible for the risks those tools introduce. In practice, that means listing third-party tools in your AI inventory, including them in risk and impact assessments, and monitoring how they perform once deployed.

What evidence do auditors expect for bias, drift, and monitoring controls?

Auditors look for evidence that bias, drift, and monitoring controls are actually operating, not just written down. Based on the requirements described above, that typically includes documented fairness definitions and trade-offs from model development, completed AI Impact Assessments, defined thresholds that trigger alerts or manual reviews, monitoring records showing how accuracy and drift have been tracked over time, and the outputs of internal audits and management reviews.

How do we scope an AIMS when AI is used across multiple teams and products?

To design an Artificial Intelligence Management System (AIMS) that works across multiple teams and products, it's important to think big - look at the organisation as a whole and cover every stage of the AI lifecycle. Start by setting clear policies that everyone can follow, and make sure roles and responsibilities are well-defined. This helps avoid confusion and keeps everyone on the same page when it comes to governance.

You’ll also need to dig into potential risks and gaps. Conduct thorough risk assessments and gap analyses to uncover areas where your organisation might be vulnerable or falling short on compliance. This step is crucial for ironing out issues before they become major problems.

Don’t forget to include ethical considerations in your plan. How will your AI systems impact users, employees, or society? Combine this with a solid strategy for managing data and monitoring the lifecycle of your AI systems. This approach ensures that your organisation handles AI responsibly and consistently, no matter how many teams or products are involved.