Top Tools for AI Bias Detection

- Easy Tools to Make AI Fair for Everyone!

- How to Choose AI Bias Detection Tools

- 1. Trinka AI

- 2. IBM AI Fairness 360

- 3. Fairlearn

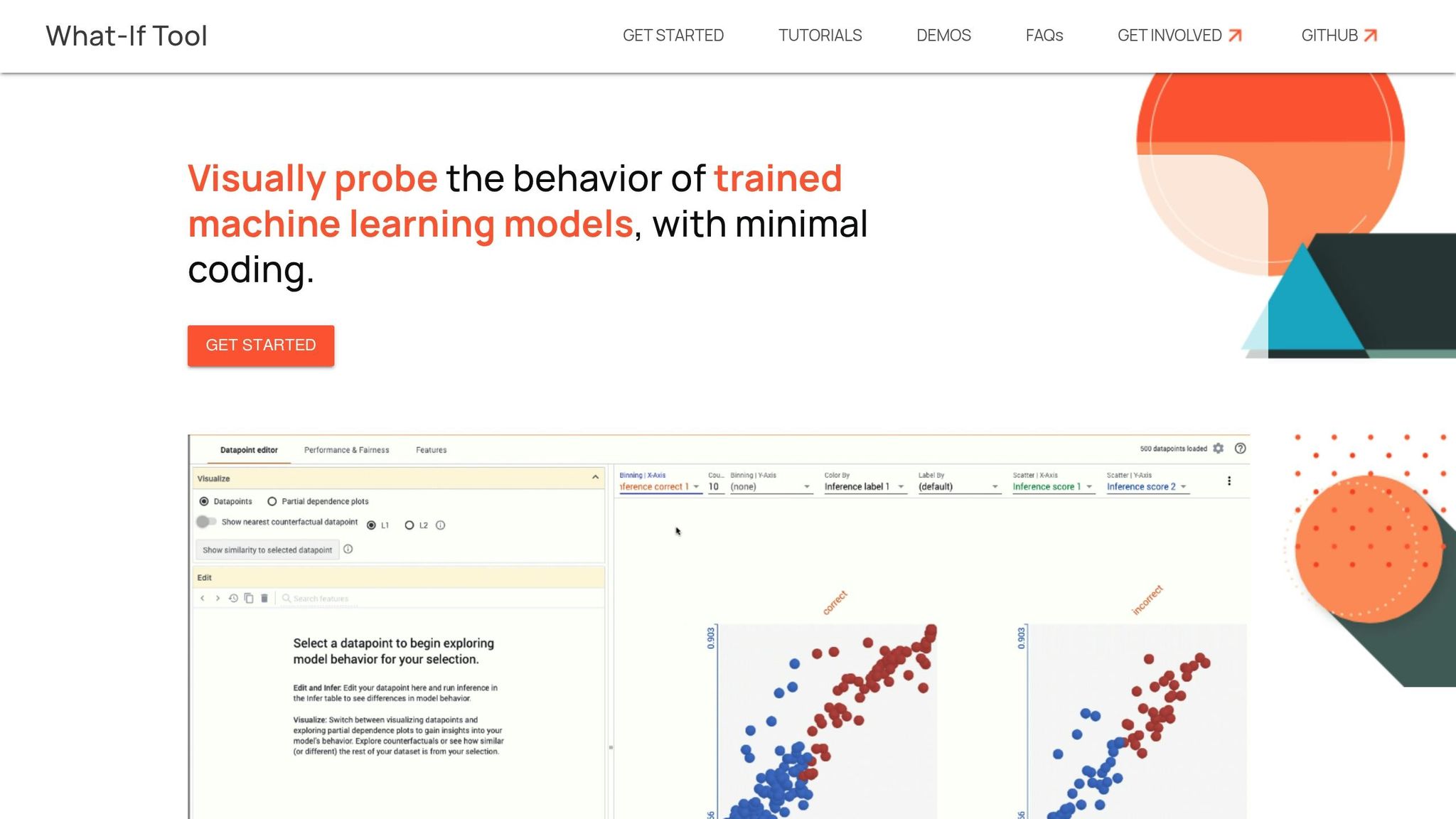

- 4. Google What-If Tool

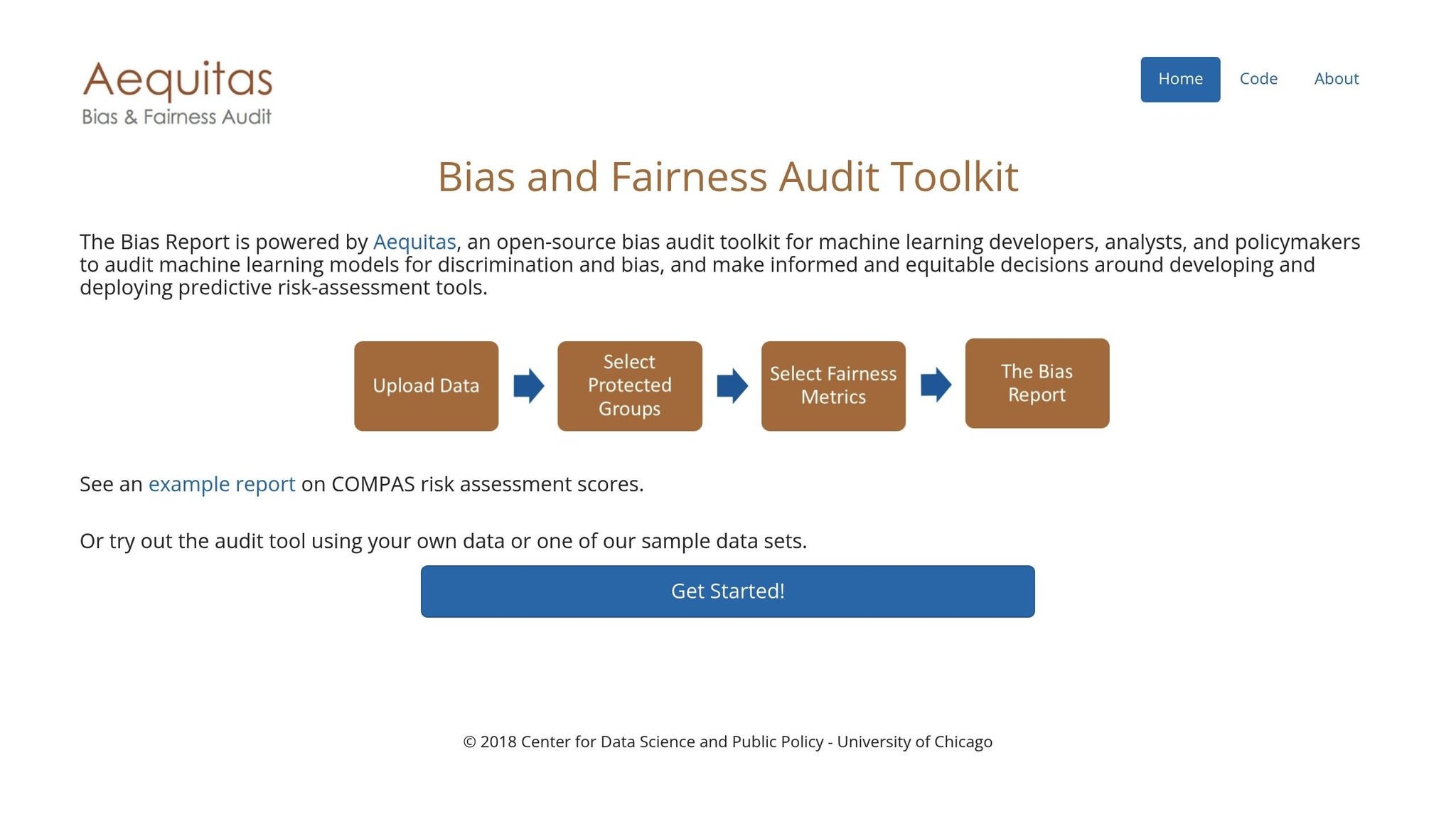

- 5. Aequitas

- 6. Microsoft Fairness Dashboard

- Tool Comparison Chart

- How to Implement These Tools

- Conclusion

- FAQs

- How do AI bias detection tools help businesses comply with U.S. laws like the EEOC and FCRA?

- What are the main differences between open-source and enterprise AI bias detection tools, and how do these affect implementation?

- What are the best ways to integrate AI bias detection tools into existing workflows for ongoing monitoring and improvement?

Top Tools for AI Bias Detection

AI bias can harm communities, violate U.S. laws, and damage business reputations. To address this, several tools help detect and reduce bias in AI systems. These tools analyze data and algorithms for issues like demographic bias, intersectional bias, and performance disparities. Here's a quick overview of six tools:

- Trinka AI: Focuses on bias in text-based outputs, identifying discriminatory language and cultural missteps.

- IBM AI Fairness 360: Open-source toolkit covering bias detection across machine learning stages with various fairness metrics.

- Fairlearn: Open-source tool emphasizing specific fairness concerns, offering detailed metrics and visual tools.

- Google What-If Tool: Interactive tool for exploring model fairness and performance without coding.

- Aequitas: Audit toolkit designed for intersectional bias analysis using fairness metrics.

- Microsoft Fairness Dashboard: Enterprise-focused tool integrated with Azure, offering detailed bias insights and compliance support.

Each tool has unique strengths, like dataset compatibility, integration options, and reporting features. The right choice depends on your specific needs, technical setup, and compliance requirements. Below is a quick comparison:

Quick Comparison

| Tool | Focus Areas | Integration | Best For | Limitations |
|---|---|---|---|---|
| Trinka AI | Text-based bias detection | NLP workflows, APIs | Content creation, NLP apps | Limited to text applications |
| IBM AI Fairness 360 | Comprehensive bias metrics | Python, ML frameworks | Enterprise audits, research | Steep learning curve |
| Fairlearn | Specific fairness concerns | Python, Azure ML | Research, Python projects | Requires ML knowledge |
| Google What-If Tool | Interactive model analysis | TensorFlow, Keras | Debugging, education | Tied to Google ecosystem |
| Aequitas | Intersectional bias audits | Python, command-line | Policy research, compliance | No real-time capabilities |
| Microsoft Fairness Dashboard | Enterprise bias monitoring | Azure ML, ML frameworks | Enterprise, compliance | Requires Azure ecosystem |

To implement these tools effectively:

- Build a cross-functional team (data scientists, legal, HR, etc.).

- Maintain an AI inventory and schedule regular audits.

- Train your team on tool usage and ethical AI principles.

- Align efforts with U.S. regulations, such as EEOC and FCRA requirements.

How to Choose AI Bias Detection Tools

When tackling the challenges of AI bias, selecting the right detection tool is a critical step. A well-chosen tool can help safeguard your organisation against legal troubles and reputational harm. To make an informed decision, consider the following key factors.

Bias Detection Capabilities should top your list. Look for tools capable of analysing multiple protected attributes at the same time, such as race, gender, age, and socioeconomic status. The most effective tools also identify intersectional bias - bias that affects individuals belonging to overlapping protected groups. For instance, they should flag differences in how systems treat individuals who fall into multiple categories, like women of a specific racial background.
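To make the intersectional point concrete, here is a minimal sketch in plain Python. The records, attribute names, and the `selection_rates` helper are hypothetical and not taken from any of the tools below. It shows how a model can look balanced on each attribute separately while treating the combined groups very differently.

```python
from collections import defaultdict


def selection_rates(records, attributes):
    """Return {group_key: share of positive decisions} for the given attributes."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        # A group is identified by the tuple of its attribute values.
        key = tuple(record[a] for a in attributes)
        totals[key] += 1
        positives[key] += record["selected"]
    return {key: positives[key] / totals[key] for key in totals}


# Hypothetical toy data: each record is one model decision (1 = selected).
records = [
    {"gender": "F", "race": "A", "selected": 1},
    {"gender": "F", "race": "A", "selected": 1},
    {"gender": "F", "race": "B", "selected": 0},
    {"gender": "F", "race": "B", "selected": 0},
    {"gender": "M", "race": "A", "selected": 0},
    {"gender": "M", "race": "A", "selected": 0},
    {"gender": "M", "race": "B", "selected": 1},
    {"gender": "M", "race": "B", "selected": 1},
]

by_gender = selection_rates(records, ["gender"])       # looks balanced
by_race = selection_rates(records, ["race"])           # looks balanced
by_both = selection_rates(records, ["gender", "race"])  # reveals the gap

for group, rate in sorted(by_both.items()):
    print(group, rate)
```

In this toy data every single-attribute rate is 0.5, yet two of the four intersectional groups are never selected. Aggregated metrics alone would miss this pattern.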

Dataset Compatibility is another crucial factor. The tool must work seamlessly with your existing data infrastructure. Some tools are built for structured data, like spreadsheets and databases, while others are designed for unstructured formats, such as text, images, or audio. Think about your data's format, volume, and storage setup. Cloud-based tools often scale well but may raise concerns about data security. On-premises solutions offer more control but require more technical resources to manage.

Transparency and Explainability are essential for understanding how the tool identifies bias. The best tools provide clear explanations of their methods and generate detailed reports on their findings. This level of transparency is invaluable when presenting results to stakeholders, regulators, or legal teams. Avoid tools that operate as "black boxes", delivering results without revealing their underlying processes.

Integration Requirements can make or break your implementation. Assess how easily a tool fits into your current AI workflow. Some tools integrate smoothly with popular frameworks like TensorFlow or PyTorch, while others may require extensive code modifications. Consider your team's technical expertise and the time you can dedicate to implementation. Tools with robust APIs and well-documented guides can help streamline this process.

U.S. Compliance Standards should be a priority for businesses operating in the United States. The tool you choose should help you meet obligations such as the anti-discrimination laws enforced by the Equal Employment Opportunity Commission (EEOC) and the requirements of the Fair Credit Reporting Act (FCRA). Industry-specific requirements also matter - financial services must account for Fair Lending laws, while healthcare organisations need to ensure HIPAA compliance. Verify that the tool can produce the necessary audit trails and documentation for regulatory purposes.

Scalability and Performance become critical as your AI systems expand. A tool that works well with small datasets may falter when faced with enterprise-level demands. Think ahead to your future needs and opt for solutions that can handle larger datasets and complex AI models. Cloud-based tools often provide better scalability, but they may come with ongoing subscription costs.

Cost Structure is another factor to weigh carefully. Open-source options like Fairlearn have no licensing fees but require significant technical expertise to implement and maintain. On the other hand, enterprise platforms come with higher costs but typically include support and training. When calculating the total cost of ownership, factor in implementation, training, and maintenance expenses. Keep in mind that the financial impact of bias-related lawsuits or regulatory penalties can far outweigh the cost of the tool itself.

Support and Documentation play a significant role in your success with any tool. Look for vendors that offer detailed training materials, responsive technical support, and active user communities. Given the complexity of bias detection, expert guidance during both implementation and ongoing use is often necessary.

Vendor Stability and Roadmap are worth considering for long-term planning. Opt for tools from established vendors with a clear vision for future development and strong financial backing. The field of AI bias detection evolves rapidly, so choose a vendor that can keep up with new regulations and technological advancements.

For new AI projects, it's best to select tools that can integrate directly during the development phase. For existing systems, focus on solutions that can quickly identify and address bias without disrupting operations. These criteria will help you evaluate and choose the right tool for your needs.

1. Trinka AI

Trinka AI is designed to uncover biases in AI-generated language, focusing on both cultural and contextual nuances. Using advanced natural language processing, it scrutinizes text-based outputs to spot discriminatory language, cultural missteps, and misunderstandings that could unfairly affect certain demographic groups. By examining subtle linguistic details and cultural references, it flags potential issues before they reach the end user.

One of Trinka AI's strengths is its ability to integrate smoothly into existing natural language processing workflows and widely used AI frameworks. Additionally, its reporting system stands out for its transparency, offering detailed explanations of the biases it detects. This feature is particularly helpful for organisations that require thorough documentation to meet compliance standards. With its sophisticated language analysis, Trinka AI goes beyond surface-level assessments, providing a deeper layer of bias detection.

2. IBM AI Fairness 360

Where Trinka AI concentrates on linguistic bias, IBM AI Fairness 360 tackles bias detection across the entire machine learning pipeline. This open-source toolkit offers a range of fairness metrics and bias mitigation algorithms that can be applied at the pre-processing, in-processing, and post-processing stages.

Detecting Cultural and Contextual Bias

The toolkit is well-equipped to spot group-level fairness issues, such as breaches of demographic parity and equalized odds, which may point to cultural biases embedded in training data. It also takes into account intersectional fairness by analysing how multiple protected attributes interact, aligning with earlier discussions on cultural and contextual bias. Additionally, it measures individual fairness alongside group fairness, providing a deeper understanding of how historical or cultural assumptions in the data can impact predictions - especially for underrepresented groups.
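The two group-level metrics named above can be illustrated without any library. This is a rough sketch in plain Python, not the AI Fairness 360 API. The labels, predictions, and helper names are made up for illustration. It assumes one common convention: the demographic parity difference is the gap between the highest and lowest selection rate, and the equalized odds difference is the larger of the true-positive-rate gap and the false-positive-rate gap.

```python
def rate(numerator, denominator):
    """Safe division that returns 0.0 for an empty denominator."""
    return numerator / denominator if denominator else 0.0


def group_rates(y_true, y_pred, groups):
    """Compute selection rate, TPR, and FPR for each group."""
    stats = {}
    for yt, yp, g in zip(y_true, y_pred, groups):
        s = stats.setdefault(
            g, {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0}
        )
        s["n"] += 1
        s["pred_pos"] += yp
        if yt == 1:
            s["pos"] += 1
            s["tp"] += yp
        else:
            s["neg"] += 1
            s["fp"] += yp
    return {
        g: {
            "selection_rate": rate(s["pred_pos"], s["n"]),
            "tpr": rate(s["tp"], s["pos"]),
            "fpr": rate(s["fp"], s["neg"]),
        }
        for g, s in stats.items()
    }


def spread(rates, name):
    """Gap between the best- and worst-off group for one rate."""
    values = [r[name] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical labels, predictions, and group membership.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_rates(y_true, y_pred, groups)
dp_diff = spread(rates, "selection_rate")
eo_diff = max(spread(rates, "tpr"), spread(rates, "fpr"))
print(dp_diff, eo_diff)
```

A value of 0 on either metric means the groups are treated identically by that measure. How large a gap is acceptable is a policy decision rather than something the metric decides.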

Compatibility Across Datasets and Languages

IBM AI Fairness 360, built using Python, works seamlessly with popular frameworks like scikit-learn, TensorFlow, and PyTorch. Its modular structure allows teams to integrate specific components into their existing systems without requiring a complete overhaul. This flexibility makes it easier to work with a wide variety of datasets while ensuring reliable bias evaluation.

Clear and Accessible Detection Methods

The toolkit includes detailed documentation that explains its fairness metrics and bias mitigation techniques. This level of transparency ensures that all stakeholders - whether technical or non-technical - can understand the bias assessments and make informed decisions about how to address them.

Integration with AI Development Workflows

IBM AI Fairness 360 integrates smoothly into current AI development processes. By automating bias testing alongside standard model validation, it supports continuous monitoring of fairness metrics, making it easier for teams to maintain fairness throughout the lifecycle of their AI systems.

3. Fairlearn

Fairlearn takes a unique approach by shifting the conversation from general bias to specific fairness concerns. This open-source toolkit offers a structured way to evaluate and address unfairness in machine learning systems, using detailed metrics and visual tools to guide fairness assessments.

Measuring Fairness Across Groups

Fairlearn uses disparity and group metrics to evaluate fairness by identifying deviations from parity. It provides detailed summaries of minimum and maximum values across sensitive groups, offering a clear picture of how fairness is distributed[1].

Instead of relying on broad terms like "bias" or "debiasing", Fairlearn focuses on specific harms, such as allocation harms and quality-of-service harms[1]. This targeted approach helps teams pinpoint and address the exact fairness issues in their systems.
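The per-group summaries described above (minimum, maximum, and the gap between them) can be sketched in a few lines of plain Python. This is an illustration of the idea only, not Fairlearn's own code. The data and the `accuracy_by_group` and `summarize` helpers are hypothetical. Accuracy is used here because an accuracy gap between groups is one simple example of a quality-of-service harm.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for each sensitive group."""
    correct, total = {}, {}
    for yt, yp, g in zip(y_true, y_pred, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + (yt == yp)
    return {g: correct[g] / total[g] for g in total}


def summarize(by_group):
    """Summarize a per-group metric as min, max, difference, and ratio."""
    low, high = min(by_group.values()), max(by_group.values())
    return {
        "min": low,
        "max": high,
        "difference": high - low,
        "ratio": low / high if high else 0.0,
    }


# Hypothetical labels, predictions, and sensitive-group membership.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]

by_group = accuracy_by_group(y_true, y_pred, groups)
summary = summarize(by_group)
print(by_group)
print(summary)
```

Reporting the difference and the ratio side by side is a deliberate choice. The difference shows the absolute gap, while the ratio shows how the worst-served group fares relative to the best-served one.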

Clear and Transparent Methodology

Fairlearn doesn't just detect fairness issues - it ensures the results are easy to understand. By aligning real-world problems with machine learning tasks, including target variables, features, and fairness constraints, it emphasizes construct validity[1]. Its framework includes elements like face validity, content validity, and consequential validity to ensure that the metrics truly reflect the intended fairness goals[1]. This thoughtful approach enhances the transparency and reliability of its methodology.

"If your data isn't diverse, your AI won't be either." - Fei-Fei Li, Co-Director of Stanford's Human-Centered AI Institute[4]

Additionally, Fairlearn provides visualizations that highlight the trade-offs between fairness and accuracy, offering a practical way to balance these priorities[2][3].

Seamless Integration with AI Workflows

Fairlearn is designed to work effortlessly with Python's scikit-learn pipelines, making it easy to incorporate into existing AI development workflows[2]. This compatibility allows teams to quickly integrate Fairlearn into their broader strategies for ensuring fairness in machine learning systems.

4. Google What-If Tool

Google's What-If Tool (WIT) takes a hands-on approach to examining machine learning models, offering an interactive way to explore fairness, performance, and explainability - all without needing to write any code[5]. As an open-source tool, it works seamlessly with TensorBoard and supports models built using TensorFlow, Keras, and other widely used frameworks[5][6]. Its user-friendly interface makes it easier to spot fairness concerns, especially those tied to cultural or contextual factors, helping users better understand and refine their models.

5. Aequitas

Aequitas takes a closer look at the issue of intersectional bias in machine learning models. Developed by the University of Chicago's Center for Data Science and Public Policy, this open-source audit toolkit helps data scientists and machine learning engineers assess bias using various fairness metrics. These include demographic parity, equalized odds, and calibration measures, which evaluate how algorithms impact different demographic groups.

What sets Aequitas apart is its ability to uncover intersectional bias - bias that affects individuals at the intersection of multiple demographic categories. While aggregated metrics can sometimes hide these disparities, Aequitas digs deeper to reveal more complex patterns of bias in datasets.

Transparency and Explainability of Detection Methods

Aequitas prioritizes transparency by offering detailed documentation for its fairness metrics and bias detection processes. Each metric is clearly explained, making it easier for both technical experts and non-technical stakeholders to understand the results. The toolkit also generates visual reports that simplify these complex metrics, bridging the gap between data science teams and decision-makers. Its open-source design allows organisations to review and customise the algorithms to suit their specific needs.

Integration with Existing AI Development Pipelines

Designed as a Python library with a command-line interface, Aequitas integrates effortlessly into standard data science workflows. It works well with widely used frameworks like scikit-learn and pandas and supports batch processing for bias audits. Teams can even automate bias detection within CI/CD pipelines, making it a natural part of their model validation processes. This ease of integration makes Aequitas a valuable tool for maintaining fairness in AI systems.
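One way to automate a bias check in a CI/CD pipeline is to compare audit metrics against agreed limits and fail the build when any limit is exceeded. The sketch below is generic Python and does not use the Aequitas API. The metric names, values, and limits are hypothetical, and in practice the `audit_metrics` dictionary would be filled from whatever audit tool your pipeline runs.

```python
def find_violations(metrics, limits):
    """Return a message for every metric that is missing or above its limit."""
    violations = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is None:
            violations.append(f"{name}: metric missing from audit output")
        elif value > limit:
            violations.append(f"{name}: {value:.3f} exceeds limit {limit:.3f}")
    return violations


# Hypothetical audit output and team-agreed limits.
audit_metrics = {
    "demographic_parity_difference": 0.12,
    "equalized_odds_difference": 0.04,
}
limits = {
    "demographic_parity_difference": 0.10,
    "equalized_odds_difference": 0.10,
}

violations = find_violations(audit_metrics, limits)
for message in violations:
    print("FAIRNESS CHECK FAILED:", message)

# A CI script would pass this value to sys.exit() so the build fails on a breach.
exit_code = 1 if violations else 0
```

Treating a missing metric as a violation is intentional. It stops a misconfigured audit step from silently passing the gate.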

6. Microsoft Fairness Dashboard

Microsoft Fairness Dashboard provides a focused solution for identifying and addressing bias in machine learning models, seamlessly integrated with Azure. Designed to cater to both technical teams and business stakeholders, it simplifies bias monitoring and helps maintain fairness throughout the AI development process. Its integration with Azure Machine Learning ensures organisations can manage fairness efficiently across their AI workflows.

Bias Detection Capabilities

The dashboard offers detailed insights into model performance across various demographic groups, using fairness metrics like demographic parity, equalized odds, and equal opportunity. These tools help teams identify specific areas where models may not perform equitably. Its Azure integration allows for large-scale bias monitoring within cloud-based machine learning workflows, making it a strong choice for enterprise use.

Transparency and Explainability

To ensure clarity, the platform includes visual charts and graphs that break down fairness metrics in an easily digestible way. This feature allows non-technical stakeholders to understand the findings and contribute to addressing any issues. By prioritising transparency, the dashboard fosters collaboration between technical and business teams.

Integration with AI Development Pipelines

The dashboard connects directly with Azure Machine Learning pipelines and supports popular frameworks like scikit-learn, PyTorch, and TensorFlow. This setup allows teams to automate bias monitoring as part of their model validation processes. Alerts can be triggered when fairness metrics drop below acceptable levels, enabling a proactive approach to maintaining fairness throughout the AI lifecycle.

Regulatory Compliance

Microsoft Fairness Dashboard also helps organisations document their bias detection and mitigation efforts. These reporting tools are valuable for meeting evolving U.S. regulatory requirements around AI fairness and accountability. This feature makes the dashboard an essential tool in the broader landscape of bias detection and mitigation solutions.

Tool Comparison Chart

Below is a concise comparison chart summarizing the key features and limitations of various AI bias detection tools. The right choice will depend on your specific goals, technical setup, and organisational priorities.

| Tool | Primary Strengths | Bias Detection Types | Integration Support | Best For | Key Limitations |
|---|---|---|---|---|---|
| Trinka AI | Focused on natural language processing with real-time detection | Language bias, cultural sensitivity, contextual bias | API integration; cloud-based deployment | Content creation, NLP applications, writing assistance | Limited to text-based applications; relatively new in the market |
| IBM AI Fairness 360 | Offers a wide range of algorithms in a comprehensive toolkit | Demographic parity, equalized odds, individual fairness | Python and enterprise systems | Research, enterprise audits, detailed evaluations | Steep learning curve; requires technical expertise |
| Fairlearn | Open-source with flexibility and Microsoft support | Group fairness, including allocation harms and quality-of-service harms | scikit-learn, Azure ML, Python ecosystem | Python projects, academic research | Limited enterprise support; demands machine learning knowledge |
| Google What-If Tool | Enables interactive visualisation and model exploration | Feature-based bias, counterfactual analysis, performance disparities | TensorFlow integration; cloud platforms; Jupyter notebooks | Model debugging, interactive analysis, education | Tied to Google ecosystem; limited offline functionality |
| Aequitas | Designed for audits with a focus on regulatory compliance | Algorithmic fairness, outcome equity, process fairness | Command line and Python-based web interface | Government agencies, compliance teams, policy research | Lacks real-time capabilities; geared toward academic use |
| Microsoft Fairness Dashboard | Enterprise-ready with an intuitive interface for stakeholders | Demographic parity, equalized odds, equal opportunity | Azure ML, scikit-learn, PyTorch, TensorFlow | Azure users, enterprise teams, regulatory compliance | Requires Azure ecosystem; subscription costs involved |

When selecting a tool, consider factors like cost, ease of use, and compatibility with your existing systems. Open-source tools like Fairlearn, Aequitas, and IBM AI Fairness 360 carry no licensing fees but require technical know-how, while enterprise offerings such as the Microsoft Fairness Dashboard on Azure provide robust support but involve subscription costs.

The tools also vary in their data compatibility. For instance, Trinka AI excels in handling unstructured text, while the Google What-If Tool is tailored for TensorFlow models. Additionally, tools like Microsoft Fairness Dashboard and Aequitas provide reporting features that help organisations meet U.S. regulatory standards, making them ideal for compliance-focused teams. Understanding these distinctions will help you align your choice with your organisation's capabilities and objectives.

How to Implement These Tools

Rolling out AI bias detection tools isn't just about plugging in software; it’s about embedding them into your workflows in a way that aligns with U.S. regulations. To achieve this, you’ll need a well-structured approach that integrates these tools into your AI development and compliance processes. Here’s how to do it effectively.

Start with a Cross-Functional Team

The first step is building a team with diverse expertise. Bring together data scientists, ethicists, domain experts, and business leaders. Include representatives from compliance, human resources, IT, legal, and engineering teams. This mix ensures you address both technical and regulatory aspects of bias detection. A well-rounded team helps uncover blind spots and ensures your efforts align with legal requirements and technical goals [7].

Create an AI Inventory

Next, take stock of all your AI systems. Document each system’s purpose, data sources, and how decisions are made. Think of this as similar to mapping out data privacy processes. Keep this inventory updated whenever you introduce new tools. This documentation will be critical for internal audits and regulatory compliance.
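An inventory like this can start as a simple structured record per system. The following sketch uses a Python dataclass. The field names, the example systems, and the one-year audit window are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class AISystemRecord:
    """One inventory entry: what the system does and how it decides."""
    name: str
    purpose: str
    data_sources: List[str] = field(default_factory=list)
    decision_logic: str = ""
    last_bias_audit: Optional[date] = None  # None means never audited


def overdue_for_audit(inventory, today, max_age_days=365):
    """Return names of systems never audited or audited too long ago."""
    return [
        record.name
        for record in inventory
        if record.last_bias_audit is None
        or (today - record.last_bias_audit).days > max_age_days
    ]


# Hypothetical inventory entries.
inventory = [
    AISystemRecord(
        name="resume-screener",
        purpose="Rank job applicants for recruiter review",
        data_sources=["applicant tracking system"],
        decision_logic="Gradient-boosted ranking model",
        last_bias_audit=date(2023, 1, 15),
    ),
    AISystemRecord(
        name="support-chatbot",
        purpose="Answer customer questions",
        data_sources=["help-centre articles"],
        decision_logic="Retrieval plus language model",
        last_bias_audit=date(2024, 3, 1),
    ),
    AISystemRecord(
        name="credit-model",
        purpose="Score loan applications",
        data_sources=["credit bureau data"],
        decision_logic="Logistic regression",
    ),
]

overdue = overdue_for_audit(inventory, today=date(2024, 6, 1))
print(overdue)
```

Keeping the last audit date on each record lets the same inventory drive the audit schedule, so a newly added system shows up as overdue until its first review.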

Establish Regular Audits and Continuous Monitoring

Bennett advises, “Organizations may want to consider comprehensive AI audits at least annually if not quarterly, with targeted reviews triggered by new AI tool implementations, regulatory changes, or identified compliance issues” [8]. Schedule regular audits using your bias detection tools, and complement these with continuous monitoring. Track metrics like bias across demographic groups, accuracy rates, and user satisfaction scores. This proactive monitoring helps catch issues before they escalate into problems for users or compliance violations.

Document Everything Thoroughly

As Bennett highlights, “This internal documentation offers clarity to relevant stakeholders, supports audit activity, and serves as a valuable - and often statutorily required - resource if external regulators inquire into the organization's AI use” [8]. Keep detailed records of your data sources, AI model parameters, training methods, and any steps taken to address detected bias. If you rely on third-party AI tools, make sure to obtain and keep their documentation as well. This shows that you’ve done your due diligence in preventing bias.

Align with U.S. Regulatory Requirements

Stay up-to-date with local, state, and federal AI laws. For instance, New York City’s Local Law 144 mandates bias audits for automated employment decision tools, while Illinois’s House Bill 3773 focuses on AI in hiring processes. If your organisation operates in multiple states, ensure your tools meet all jurisdiction-specific rules. Make sure your tools can generate reports required by regulators.
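Bias audits of hiring tools typically report selection rates and impact ratios per category. Here is a minimal sketch, assuming an impact ratio is a category's selection rate divided by the rate of the most-selected category. The counts, the group names, and the 0.8 flagging threshold (borrowed from the four-fifths rule of thumb) are illustrative assumptions, not a statement of what any particular law requires.

```python
def impact_ratios(selected, applicants):
    """Return {category: selection rate / highest selection rate}."""
    rates = {g: selected[g] / applicants[g] for g in applicants}
    top = max(rates.values())
    return {g: (r / top if top else 0.0) for g, r in rates.items()}


# Hypothetical applicant and selection counts per category.
applicants = {"group_a": 200, "group_b": 100, "group_c": 50}
selected = {"group_a": 100, "group_b": 30, "group_c": 22}

ratios = impact_ratios(selected, applicants)

# Illustrative threshold only; check the rules that apply in your jurisdiction.
flagged = sorted(g for g, r in ratios.items() if r < 0.8)

for group, ratio in sorted(ratios.items()):
    print(f"{group}: impact ratio {ratio:.2f}")
print("flagged:", flagged)
```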

Create Feedback Mechanisms

Set up channels for employees and users to report AI-related concerns. These feedback systems can act as an early warning for bias issues that automated tools might miss. Establish clear procedures for investigating concerns and conducting additional bias assessments when needed. Use this feedback to refine your tools and improve their configuration over time.

Train Your Teams

Your team needs to understand both the strengths and limitations of your bias detection tools. Provide training on ethical AI principles and establish clear guidelines for every step of AI model development. Teach team members how to interpret bias detection results, when to escalate issues, and how to document corrective actions thoroughly.

Conclusion

Detecting bias in AI systems isn’t just a nice-to-have for U.S. businesses - it’s a must. With regulators paying closer attention to fairness in AI, companies that fail to address bias could face hefty fines, legal troubles, and even damage to their reputation.

The tools we’ve discussed offer practical ways to spot and reduce bias before it harms users or violates compliance standards. But identifying these tools is only the first step. The real challenge lies in putting them to work effectively.

To implement these tools successfully, businesses need to weave them into their development processes. This means scheduling regular audits, creating thorough documentation, and assembling teams that understand both the technical and regulatory aspects of AI. The systems you set up now will determine how prepared you are to handle future scrutiny.

For companies seeking extra guidance, technical due diligence or fractional CTO services - like those offered by Metamindz - can make a big difference. These experts can help you choose the right tools, stick to timelines, and stay compliant, all while keeping your broader goals on track.

Investing in bias detection early can save your organisation from much bigger headaches down the line. Yes, it requires time and resources, but the alternative - facing fines, losing customer trust, or tarnishing your reputation - can cost far more.

Choose your tools wisely, build strong systems, and address bias before it becomes a problem. It’s not just about avoiding a crisis - it’s about building trust and staying ahead.

FAQs

How do AI bias detection tools help businesses comply with U.S. requirements such as EEOC guidelines and the FCRA?

AI bias detection tools play a crucial role in helping businesses comply with U.S. laws like the Equal Employment Opportunity Commission (EEOC) guidelines and the Fair Credit Reporting Act (FCRA). These tools are designed to identify and address biases in AI-driven decision-making processes, such as hiring practices or credit evaluations.

Used in routine audits, they help uncover potential discriminatory patterns and produce documentation that supports compliance with anti-discrimination regulations. Obligations such as FCRA consent and disclosures still depend on your own processes. By tackling bias head-on, companies not only promote fairness but also lower their legal risks while staying in line with essential federal requirements.

What are the main differences between open-source and enterprise AI bias detection tools, and how do these affect implementation?

Open-source AI bias detection tools stand out for their transparency and the ability to be tailored to specific needs. Developers can dive into the code, tweak algorithms, and adapt them as needed. Plus, these tools are often more budget-friendly and can run on local systems, making them a great choice for projects that thrive on community contributions and flexibility.

In contrast, enterprise solutions shine in areas like security, built-in support, and reliability - key factors for organisations focused on compliance and scaling up. That said, they tend to come with a higher price tag and less visibility into how they work, which can also mean dedicating more resources to set up and maintain them. Deciding between the two boils down to your project’s priorities, budget constraints, and the balance you need between control and ease of use.

What are the best ways to integrate AI bias detection tools into existing workflows for ongoing monitoring and improvement?

To successfully integrate AI bias detection tools into your workflows, it's crucial to implement continuous monitoring systems. These systems should regularly evaluate your AI models for bias and connect directly with your existing data and operational platforms. This integration ensures smooth oversight and enables real-time detection of potential issues. Automated alerts can also play a key role by flagging biases early, giving you the opportunity to address them promptly.

Incorporating explainable AI (XAI) and fairness metrics can offer valuable insights into how your models reach decisions. These tools not only make bias identification more straightforward but also support long-term improvements. Over time, they can help your organization align with ethical and regulatory standards. By embedding these strategies into your workflows, you can build AI systems that are both more reliable and equitable.
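The continuous monitoring and automated alerts described above can be sketched as a check that runs on each new batch of predictions. The example below is plain Python with hypothetical weekly values. The limit and the choice to alert only after two consecutive breaches are assumptions, made so that a single noisy batch does not trigger an alert.

```python
def monitor(batches, limit, consecutive=2):
    """Return an alert for each batch that extends a run of breaches
    to at least `consecutive` batches in a row."""
    alerts = []
    streak = 0
    for label, gap in batches:
        # Count breaches in a row; any healthy batch resets the count.
        streak = streak + 1 if gap > limit else 0
        if streak >= consecutive:
            alerts.append(
                f"{label}: gap {gap:.2f} above {limit:.2f} for {streak} batches"
            )
    return alerts


# Hypothetical fairness gap (for example, a selection-rate difference) per week.
weekly_gaps = [
    ("week-1", 0.04),
    ("week-2", 0.11),
    ("week-3", 0.06),
    ("week-4", 0.12),
    ("week-5", 0.15),
]

alerts = monitor(weekly_gaps, limit=0.10)
for alert in alerts:
    print("ALERT:", alert)
```

In this run the isolated breach in week 2 is ignored, while the sustained breach across weeks 4 and 5 raises one alert. Lowering `consecutive` to 1 would make the monitor more sensitive but noisier.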